ASK OCE — August 4, 2006 — Vol. 1 Issue 11

When accidents happen in organizations like NASA, engineers first look to the chain of events to isolate the technical problem. But what if an accident stems from a systems failure or human error instead?

After studying technical risk and safety for more than two decades, Nancy Leveson of the Massachusetts Institute of Technology (MIT) is convinced that a leading cause of accidents doesn’t show up in a fault tree analysis, failure modes and effects analysis, or probabilistic risk assessment.

“Risk increases slowly, and nobody notices,” Leveson says, likening it to the “boiled frog” phenomenon. “Confidence and complacency increase at the same time as risk.”

As project teams go about their work with a certain known level of risk, they tend to discount the risk with the passage of time if nothing goes wrong. They may even cut corners in the belief that perhaps they overestimated the risk in the first place. The result is an accumulation of risk as a project progresses through its lifecycle.

Leveson, whose recent work with NASA has been partially funded through the Center for Program/Project Management Research (CPMR), advocates adopting a systems theory of accidents. “Accidents arise from complex interactions among humans, machines, and the environment,” she says. “A system is not a static design.”

Her work draws heavily on control theory, which she believes offers the key to understanding accidents. “Accidents are a control problem,” she says. “To understand accidents, we need to examine the control structure itself to determine why it’s inadequate to maintain safety constraints, and why events occurred.”

To examine the potential for accidents and the scenarios involved, Leveson and her colleagues at MIT have developed system dynamics models of the NASA manned space program and performed a risk analysis of the technical authority organizational structure created after the Columbia accident. Because the models are formal and have a mathematical foundation, they can be used to evaluate policy decisions and organizational designs, to identify leading indicators of increasing risk, and to detect drift toward states of unacceptably high technical risk.

NASA has already begun to recognize the real-world relevance of Leveson’s CPMR-funded research: she is currently working with the Exploration Systems Mission Directorate (ESMD) to develop risk management models.

Read more about Nancy Leveson and her work.

In This Issue

Message from the Chief Engineer

A View From Outside: China Shoots for the Moon

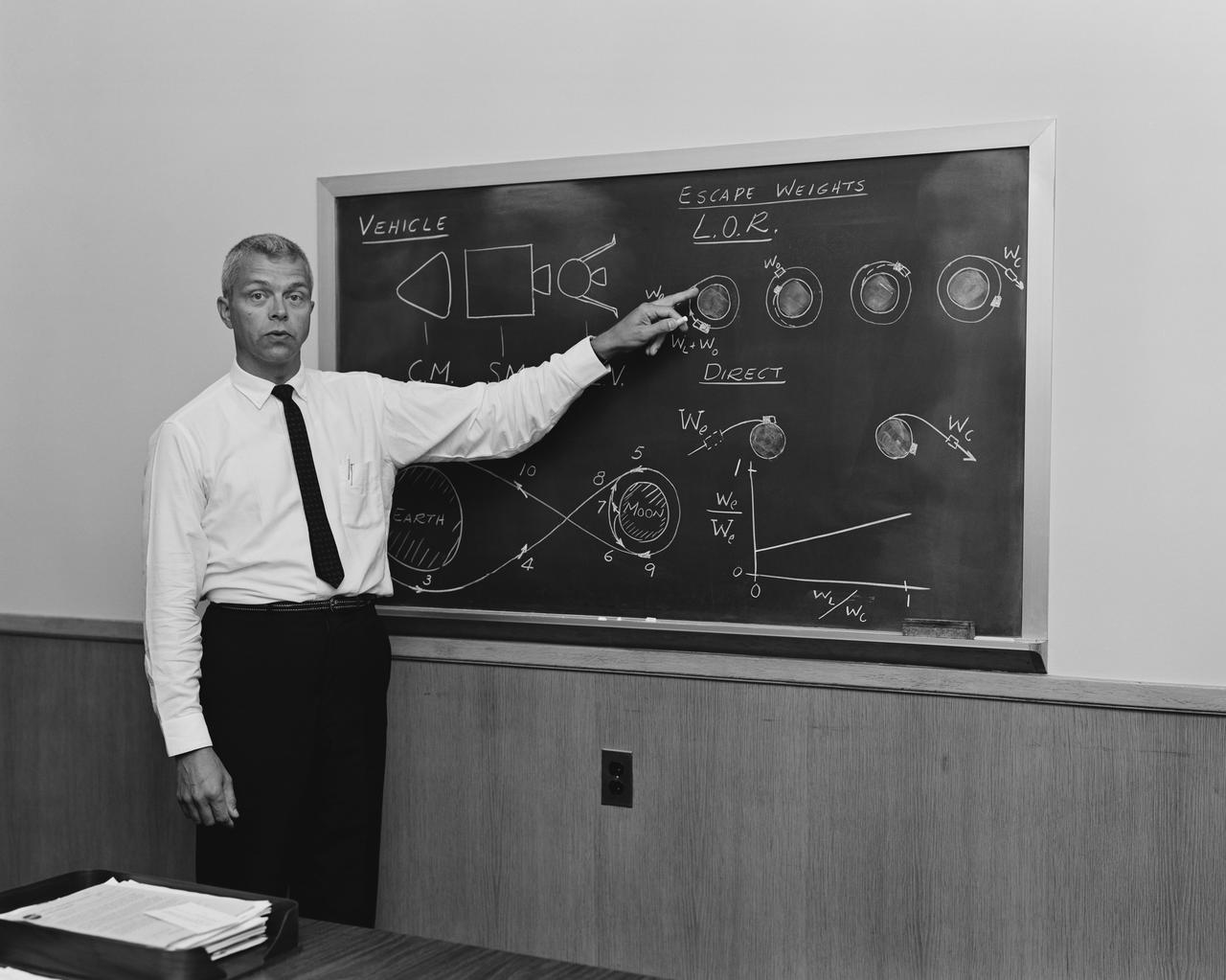

This Week in NASA History: 35th Anniversary of Apollo 15

A New Approach to the APPEL Curriculum

GAO Questions CEV Acquisition Plans

Trends in Project Management: The PMI Perspective

Beyond the Chain of Events: A New Model for Safety

National Research Council Assesses NASA’s Science Portfolio

Leadership Corner: Effective Presentations