By Mary Chiu

If you know anyone who’s been involved in building a spacecraft, I’m sure you’ve heard the mantra, “Test what you fly, and fly what you test.” Listen to a project manager from my institution [The Johns Hopkins Applied Physics Laboratory (APL)] talking in his or her sleep, and this is likely what you’re going to hear.

At APL, we do a lot of testing. We probably do more testing in the initial stages of a project than we could explain to review boards. Perhaps we are conservative in this respect, but our project managers and engineers believe in getting a good night’s sleep before a launch, and testing is a good way of ensuring that.

So you can imagine my reaction when the NASA project manager, Don Margolies, suggested that on the Advanced Composition Explorer (ACE) mission we pull all the instruments off the spacecraft after we had just completed the full range of environmental testing. This would allow the scientists to do a better job of calibrating their instruments. I remember the scene well because it haunted me for weeks afterwards. We had just come out of the last thermal vac test at Goddard, and one by one the instruments, nine in all, were pulled off and returned to their developers for more tests of their own.

After I picked myself up off the floor, I began to think about that other mantra we hear quite a bit in this industry: “The customer is always right.” In theory, maybe. To his credit, Don accepted the responsibility (in writing) for this action and did everything he could to make sure the instruments would be returned in time for us to reintegrate them — and to involve me in that process — but I don’t want to minimize the impact on my team. There were certainly a lot of late nights towards the end as instruments came in right on the wire, maybe even a little later than the wire. This is not something I would want to do again; however, I would if I had a customer who was reasonable and understood that it wasn’t just something he wanted, but something we must work through together.

Now contrast this with another situation that had occurred earlier in the project. A couple of months after the Critical Design Review (CDR), some people over in the NASA project office were saying, “Why not use a different data handling format? With all the really neat things being done on other spacecraft, why are we getting this ‘old-fashioned’ data handling system?”

For my team at APL, the ones who were going to build the spacecraft, this was no small matter. To change to a different data handling system at this point would have required a major restructuring of the spacecraft’s design. Understand: we were already proceeding along with fabrication, and major changes of this sort were not to be taken lightly.

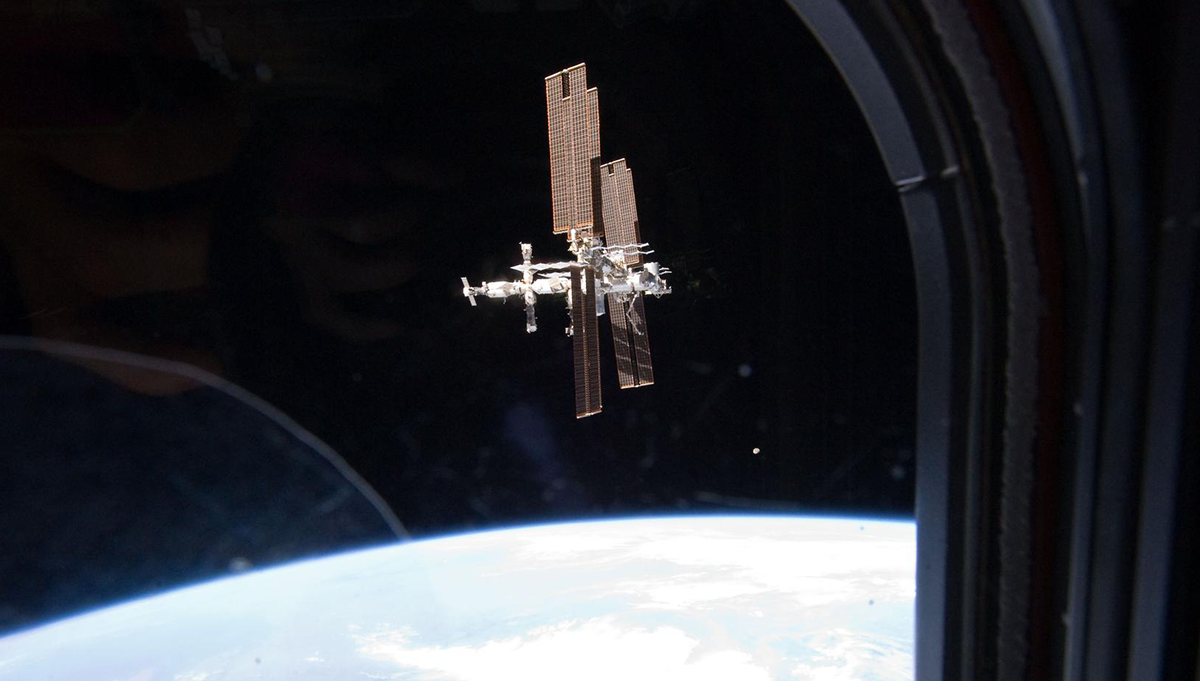

ACE was supposed to be a simple spacecraft, and that’s why we had decided on a simple, albeit “old-fashioned,” data handling system. Early on in the project, my lead engineer on the Data Handling System, Rich Conde, worked this out with the Principal Investigator, Dr. Edward Stone. Indeed, it was Dr. Stone’s decision to go with this type of data handling system. At one of the reviews, Rich said, “This is the most simplified approach, and this will be the most straightforward to develop and to test. Is this the way you want to go?” He then presented the options, and Dr. Stone said “keep it simple.” In fact, “keep it simple” became our mantra. We thought that was the end of the issue.

The Johns Hopkins Applied Physics Laboratory in Columbia, Maryland had responsibility for building the Advanced Composition Explorer (ACE) spacecraft. Scientific instruments were mounted on the outside of the spacecraft, providing workers with easy access when the instruments had to be removed following the integration and testing phase of the project.

When the project office at NASA says, “Why can’t you do this and not that?”, the last thing you want to do is ignore them. I got my leads together to formulate our position, and then I responded to the project office by writing a paper, explaining the ramifications of such a change. Well, apparently that wasn’t good enough. What they sent back to us we already knew. Newer data handling systems provide reprogrammability, meaning that if one instrument shuts down you can send more data to the other instruments, and isn’t that a good thing? Yes, of course it is; but the point I had to keep coming back to, the crux of the issue as far as I was concerned, was that we had not intended the system to be reprogrammable at the CDR.

We went round and round about that, and there was quite a bit of paper exchange. “Okay,” I said at last, “if you want to give us a change order, fine, I’ll give you the impact statement, and it will be in cost and schedule. If you still want to change from what was agreed on in the CDR, that’s fine too,” but I made clear that they couldn’t change requirements this radically and still maintain the original schedule.

This was probably my first real test as a project manager. I was new at this and I decided that I was not going to get tagged the first time out. Younger than normal for a project manager at APL, and also female — the first female project manager at APL on a project this big — I had sparked some concern in the project office as to whether I was up to the challenge. So I had something to prove too.

There were several comments intimating that the people on my team were not a “can-do” group. That upset many of us. Like any highly motivated team, we took pride in our work, and I had to negotiate with the group in making sure none of this unpleasantness escalated into something that might have a corrosive effect on the project. I spent time coaching people as to how they should behave: “Okay, you’re professionals and we know you are good,” I told them. “These are our customers, and we always have to be courteous. You still have to make yourself available to them. They will be here talking to our people. Questions get asked, and that’s only natural, but if questions start sounding more like directions, or why don’t you do this or why don’t you do that, very politely say, ‘Well, that’s an interesting idea, but let’s bring Mary into this and discuss it at the project level.’”

Naturally, you want to have open communication with the customer, but you also have to monitor how people respond to things. Overall, I think the team, myself included, became a lot better at approaching communications during this experience. What we did a better job of as time went by was to not just say “no”, but “no, because if you do this it will impact this, this, and this.” Once you explain things like that, rather than just flat-out saying “no”, you’re not as likely to hear the customer come back to you with “What do you mean you can’t do that? It seems like such an easy change to me.” Yes, we faced some awkward situations, but mostly the team did a fabulous job of addressing customer concerns.

One thing I learned on ACE is that you have to decide what is really worth putting your relationship with the customer in jeopardy over. There were times when I didn’t agree with what the customer wanted, but I was still going to do whatever I could to accommodate a customer request. The customer is always right — in theory — but nothing in a space flight program is ever a simple change, and it can have ramifications that you may not realize until later. Sometimes you have to point out to the customer that what is being asked for may not be in the best interest of the project.

Ultimately, we resolved to stick with the original data handling system, but there was quite a bit of unpleasantness during this time. Now, contrast this with what happened later in the project. In the case of reintegrating the instruments, although I disagreed with what we were asked to do, I was able to work with the customer on it because it was clear we had to cooperate. Don consulted with me, listened to my concerns, worked around them, and in the end treated me as a partner. This was a much different experience from the one we’d had earlier in the project with the data handling system, and it illustrates just how much two separate entities can accomplish in a spirit of cooperation.

Lessons

- The project manager should use the input of her leads to defend positions about project issues, but it is the project manager’s responsibility to speak to the project’s customer.

- Cooperation between stakeholders on a project is critical in resolving conflicts.

Question

Given that trust and openness are critical to a successful contractor-customer relationship, what do you do as the project manager for the contractor when you cannot develop a trusting relationship with your customer?
