By Marty Davis

About a year and a half ago, I sent all of my people — the support contractors and the civil servants alike — to risk management training. It was part of an ongoing commitment to manage risk effectively on my weather satellite program at the Goddard Space Flight Center.

They broke into groups for a full day of training, and then they all got together for a workshop to create a list of the risks we faced. When I came into the workshop, I told them that they were free to suggest any risk they wanted, but they needed to understand that our senior management team was going to review all the submissions to decide what was relevant.

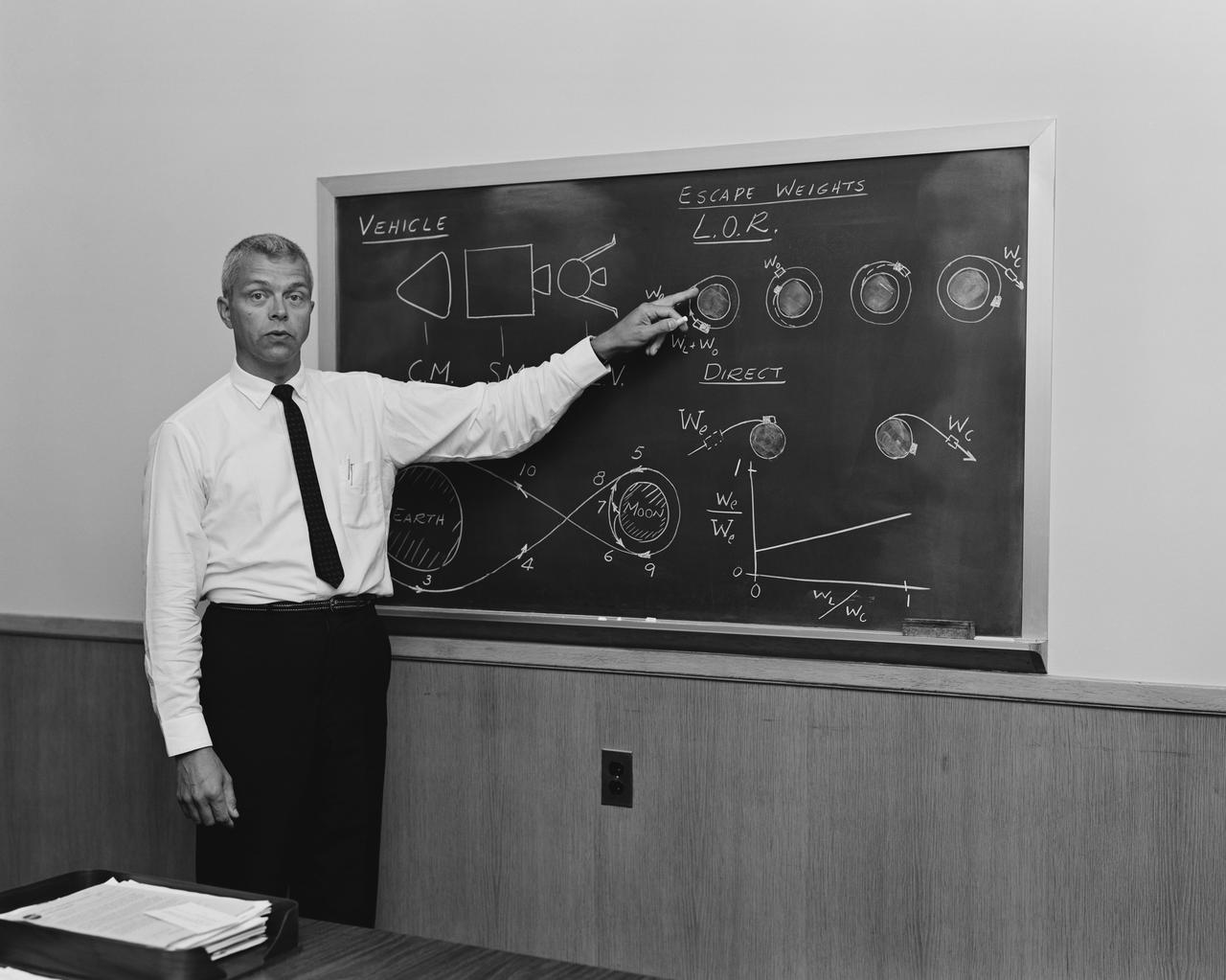

“Your imaginations could go wild,” I explained to them, “and you could generate hundreds of risks — that you get run over by a car, or other things like that. We can’t plan for situations like that. So don’t submit ridiculous things to us like, ‘We’re going to crush an instrument,’ or ‘We’re going to drop the spacecraft.’ Those just aren’t credible.”

At the end of the day, we collected all the risks they came up with, and we entered the credible ones into our system for tracking. We reviewed some of these risks every other week and revisited the entire list periodically. We were doing what we could to manage risk on the program — or so we thought.

You’re Not Going to Believe What Happened

On September 5, 2003, my wife and I left to go on vacation. We planned to spend two weeks wandering around New York State seeing all the sights. When we left the house, I turned off my cell phone, but kept my pager on — in case anyone needed to get hold of me. We had a wonderful weekend. Then, bright and early on Monday morning, my pager went off. It was the Project Manager for one of our spacecraft. She had been trying to reach me on my cell phone since Saturday to tell me that the day after I left, Lockheed-Martin had dropped one of my spacecraft.

You can go through your whole career and never have someone drop one of your spacecraft. I think that would have been nice. So one of the first things I did when I got back was to ask whether I could retire retroactively to Friday, so it wouldn’t have happened on my watch. They just laughed that off.

Then we got to work. Almost immediately, four investigation teams were formed — two by Lockheed-Martin and two by NASA. Each was tasked to investigate a different aspect of the accident. These aspects included not only finding out what happened, but also looking for systemic problems in the program, determining next steps, and assessing liability.

What Went Wrong?

The “what happened” investigation didn’t take long to report its findings. To begin with, the procedure called for eleven people to be present for this operation. There were only six there. The Lockheed-Martin people had decided some time earlier that three of them weren’t really needed — but they had never redlined the procedure and notified us. The other three hadn’t been scheduled. The safety guy wasn’t even notified, even though he was listed in the procedure.

The operation was scheduled to begin at 6:00 a.m. They also should have had a NASA QA guy there, but when they called him, he said he’d be in later and to proceed without him. When the contractor’s QA person arrived at about 6:30 a.m., they were on step six of the procedure, and he said, “Oh, you’re on the sixth step. Let me sign off on the first five.” And he stamped them, without bothering to look at anything.

One of the procedure steps involved inspecting the cart to make sure it was ready to take the spacecraft. The test conductor said he had used the cart a week earlier, so what could have happened since then? He didn’t inspect it.

Then, as they were lowering the spacecraft, one of the technicians went over to the cart. He told them something looked different, but the test conductor didn’t go over to look. He just said to go ahead. It turned out that a ring of bolts was missing. That’s what looked different.

Many steps were bypassed that day, and any one of them would have caught the problem and prevented the accident. They ignored them all. They went on. They mounted the spacecraft. Then they went to turn it over on the dolly, and it hit the ground. A 3,000-pound spacecraft dropping three feet onto a concrete floor gets damaged. Exactly how badly was harder to assess, but estimates ran up to $200 million.

Pointing Fingers

After the Mishap Investigation Board (MIB) draft report came out, the test conductor and two other people were fired. It was Lockheed-Martin’s way of showing that it wouldn’t tolerate this kind of activity. The way I saw it, the people who got fired weren’t necessarily the ones who should have been blamed, because they weren’t the root cause of the accident. I felt the blame really belonged higher in the organization. The Project Manager was replaced six months later.

There were several MIB conclusions with which I took issue. For instance, the board put some of the blame on the government because we didn’t have our own QA person there at the time of the accident. In my view, what I should have done was review all of the procedures and make certain that things were in place for the contractor to do the work properly and safely: safely for the people, and safely for our equipment.

They suggested that we needed to have a civil servant in residence at a plant for every project like this. But I don’t think it matters what badge someone wears. He or she just needs to have the right dedication, the right training, the right experience, the right everything. Being civil service doesn’t mean a damn thing. I have actually used civil servant leads and contractor leads at one time or another in the past. Either will work as long as you have the right person in the right situation.

But I was told by my management, “You will implement everything that is in the report.” No discussion, no exceptions.

Around that same time, I got my copy of the Columbia Accident Investigation Board (CAIB) report. After reading it, I called my deputy center director. I told him that the CAIB report tells me not to blindly do things that I think are stupid, so we needed to talk about the MIB report. He started to laugh. Then he said that he would have to think about that one.

So we had a little standoff. Since then, I have spoken with the chairman of our investigation board and found out that the MIB team didn’t unanimously agree on the points I had problems with. The next time the full team meets in Washington, I’m going to get to talk to them.

An Incredible Risk Repeated

A risk (dropping a spacecraft) that I had summarily dismissed as “not credible” at our risk management workshop actually has real-world precedent, both before and after our own event.

In mid-2000, another contractor, let’s call them Contractor-B, dropped a spacecraft. You didn’t hear much about that, because it was a commercial contract, not a government one. They dropped it because bolts were missing from the dolly. (Sound familiar?) We knew they dropped it, but the details never came out.

The same Contractor-B dropped another spacecraft in mid-December 2003. That made it into Space News, but without much detail. They had just run a thermal vacuum test on the spacecraft in Seattle, Washington, and then dropped it while putting it back into the shipping container. Someone was hurt in that accident.

None of these is a simple case in which a team missed one step and an accident followed. It’s always a combination of skipped steps, miscommunications, and dangerous assumptions. So how do we mitigate this sort of risk?

First, we need to identify the risk properly. In our case and the two I cited above, the real risk wasn’t necessarily “dropping the spacecraft,” even though that was the end result. The risk in our case would more accurately be called “complacency.”

We had a long-term project with our contractor. Their attitude was that a spacecraft lift was not a risky thing. After years of doing this work, they saw it as very low-risk. But, in truth, it’s always a hazardous operation. It should never be considered low-risk. It always requires the full attention they gave it the first time they did it.

I’ve come to realize that, no matter how long you work in this business, new experiences will keep coming along. Each one broadens your horizon and helps you do better.

Lessons

- Safety requires strict adherence to procedures. Period!

- However, adherence to procedures in repeated operations also requires the careful attitude typical of “first-timers.”

Question

To what extent is adherence to procedures, coupled with the right attitude but unsupported by proper experience-based judgment, sufficient to prevent known risks but insufficient to prevent unknown ones?
