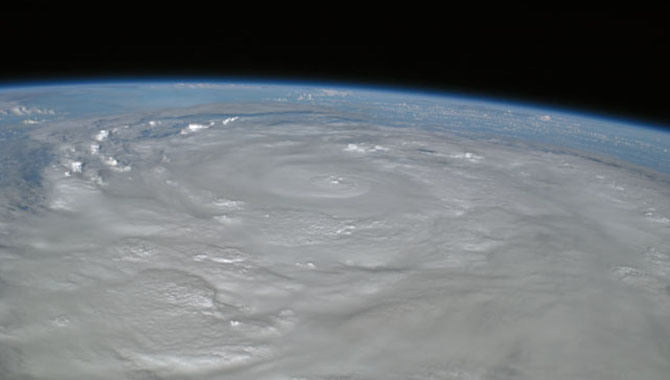

Picture of Hurricane Ike taken by the crew of the International Space Station flying 220 statute miles above Earth.

Photo Credit: NASA

May 10, 2011 Vol. 4, Issue 3

Operating the International Space Station under normal circumstances is challenging. Doing it during the third costliest hurricane to hit the United States is another story.

Natural disasters here on Earth are usually not the first performance-threatening obstacles to space exploration missions; budgets and technical problems are more frequent show-stoppers. On September 9, 2008, when Hurricane Ike was headed for Houston, it had been at least two decades since the last big storm hit Johnson Space Center (JSC). Ike was the third storm in four weeks to trigger an emergency response, compelling hurricane-fatigued area residents to evacuate or hunker down to ride out the storm. The last concern for most local residents was the International Space Station (ISS).

That was not the case at JSC, which was busy with operations and preparations for ongoing and future missions. NASA astronaut Greg Chamitoff was aboard the ISS on Expedition 17 with two cosmonauts, Progress and Soyuz vehicles were scheduled to dock and undock from the ISS in early September, and STS-125 was slated to launch October 8 for the final servicing mission of the Hubble Space Telescope.

Before Ike made landfall, a “Rideout” team was in place to maintain necessary operations within JSC, which includes Mission Control Center (MCC) for ISS. This meant maintaining vital servers and coordinating station operations. NASA Flight Directors Heather Rarick and Courtenay McMillan, along with their teams, were tasked with sustaining ISS operations from remote locations throughout the storm.

A Four-Week Dress Rehearsal

Despite regularly rehearsing emergency response plans, chances to execute and learn from them are few and far between. Events like September 11, 2001, and Hurricane Lili in 2002 drove the development of improved backup plans in the event that ISS operations were jeopardized.

NASA mitigates the risk of losing ISS command and control in Houston through redundancy. A smaller version of mission control in Moscow serves as one backup, though its capability is limited by its reliance on ground stations, which can only transmit data when the ISS flies over their antennas. The Backup Control Center (BCC) at Marshall Space Flight Center provides more functionality today, but it was still in the process of being configured in May 2008. Even with two backups, JSC seeks to avoid losing capability through Houston. “Once you swing away from Houston,” said Rarick, “it takes a long time and effort to swing back.”

Enter the BCC Advisory Team (BAT), a mobile squad dispatched to undisclosed locations to carry out ISS operations. This team can quickly provide command and control capability if MCC is unable to do so. It was dispatched when Ike started on its path straight for Houston (twelve days after Hurricane Gustav, which arrived twenty days after Tropical Storm Edouard). In addition, McMillan flew to Marshall Space Flight Center (MSFC) to lead the BCC team. Rarick joined BAT outside of Austin, Texas, bringing the plans and data already in progress to support the current astronaut team on ISS (Expedition 17), along with overall ISS systems status and plans. “Once BAT is operational, then we just sit and wait until all MCC operations in Houston are handed over to BAT,” said Rarick.

No, no! Don’t shut that one down.

BAT set up their mobile mission control in a small hotel conference room. Two digital clocks, labeled “GMT” and “CST” with yellow Post-It notes, were at the front of the room. Outside, parents, children, and pets lined the hotel halls, seeking refuge from the storm. None would have guessed what this team was doing.

The first half hour of every morning was rough. “At 8:00 a.m., the hotel guests would get up and check their email, check the Internet, and then we’d drop off (lose the connection),” said Rarick, referring to the effects caused by the short surge in online traffic at the hotel. “We would have to reestablish our Internet link, and we’d be fine most of the day.”

Maintaining connectivity proved to be a challenge. Although BAT had a backup—using the BCC team at MSFC—the international partners didn’t. Computers in Houston were essential to providing command and control from the international partners to ISS. This was a major reason to keep Houston up and running for as long as possible. McMillan made constant calls to the hurricane Rideout team about the status of various computers and servers. Some had to be covered with plastic wrap; others had to be shut down entirely because of water leaks in the roof. Whenever Rideout delivered updates about equipment that had to be taken offline, McMillan recalled, “We’d think, ‘No, no! Not that one.’”

Progress on a Schedule

As Ike approached Houston, BAT had gone west, the BCC team had gone east, and a Russian Progress vehicle had launched. Progress began its journey to the station on September 10. Ike’s timing was less than ideal.

Docking a Progress spacecraft to the ISS is a critical operation that involves conducting thermal analysis and reorienting the solar arrays, among other things. The ISS flies at an inclination of 51.6 degrees, which is a tough environment in terms of thermal conditions. Certain changes in temperature can cause structures like the solar array longerons (long, sturdy rods that support the arrays) and equipment positioned outside the ISS to expand or contract. “We go through larger hot and cold periods than we originally planned for some space station hardware,” explained Rarick. “So when we have to configure for a docking, we have to do thermal analysis.” This thermal analysis has to be done on a specific Houston computer.

To obtain the details needed, the thermal analysis team had to get creative. In order to communicate, the team had to relocate to an out-of-the-way coffee shop to get a Wi-Fi connection. “We had to send them the information needed to run the analysis back in a deserted office,” said Rarick. “They would get the computer up and running, do all the analysis, and tell us if the plan was thermally acceptable.”

Additionally, a Progress vehicle approaches the ISS in such a way that its thrusters can damage the solar arrays if left unmoved. Reorienting the solar arrays usually decreases the amount of energy they can acquire, which means instituting energy management procedures. Mission control powers down certain modules to conserve energy prior to an event. It is a complex maneuver, explained Rarick. “One loss of one computer and we can’t put our solar arrays in the right position.”

“For these events we always have full redundancy.” But the BAT did not have the necessary redundancy in its systems. BCC didn’t either, but it had some redundancies that BAT didn’t. BAT handed over control to McMillan. “Realistically, we were going to get into the situation eventually,” said McMillan about the Progress docking. “The fact that we got into this situation right out of the gate took a lot of us by surprise.”

Space Station Aside

While station operations, computer servers, and buildings comprise one part of the emergency response plan, taking care of families, relatives, employees, children, and pets is the other. “Getting your house ready is no easy task,” said Rarick. “Literally, you go through your house and say, ‘What do I care about?’”

Evacuation isn’t easy. Aside from two minor freeways, I-45 is the one and only major freeway leading out of Houston. “Pick the wrong way, and you’re still in the hurricane,” said Rarick. “People get hurt, pets get lost, homes are destroyed, valuables are lost.”

Most of all, Rarick and McMillan appreciated having information. “All of us were just glued watching the news, trying to figure out what was going on,” recalled McMillan. “After Ike’s landfall, I was incredibly impressed by how the management team, not just the management, but the team as a whole back in Houston pulled together to get information and help each other out.”

Volunteer crews deployed around the community to clear driveways, cut down tree debris, share generators, or visit homes to send status reports back to families who couldn’t return yet. Some areas didn’t get power back for weeks. Stagnant water provided a breeding ground for mosquitoes. Dead animals had to be removed. Homes had to be salvaged, and communities rebuilt.

“There was a huge effort, and it was very well organized. NASA management teams put volunteers on teams, called you, and told you where to show up and what to do,” said Rarick. “It was significant. Those of us who were unable to return home were well taken care of.”

Ready for the Next Time Around

Despite the havoc Ike wreaked, JSC received praise for its response. “The JSC team did an outstanding job of preparing prior to the storm and recovery afterwards – through these difficult experiences our collective knowledge was expanded,” wrote Mike Coats, center director of JSC, in a lessons learned report on Ike. “Most of the stuff that became lessons learned were holes that we didn’t anticipate or didn’t fully understand,” said Rarick. “Not because of a lack of preparation.”

One of the biggest lessons Ike brought to light was orchestrating center preparedness. Starting with Level 5 (the beginning of hurricane season in May) and ending with Level 1 (the hurricane has arrived), JSC choreographed all the preparation of all the center’s assets. However, while preparedness levels have predetermined schedules to them, hurricanes don’t.

Mission Control has a large stack of evacuation checklists. “Everyone pulls out the procedure, we walk through them, and we track when they are done.” They are systematic and vigilant with these checklists, Rarick explained. A problem arises when a predetermined level is supposed to take 24 to 38 hours to complete, but the storm has changed pace and only four hours remain. Said Rarick, “You have an expectation and you go into work one day and you think ‘OK, we’re on Level 4. How do we get to a Level 3 late today or tomorrow?’ Suddenly, it’s late afternoon and JSC is at Level 3, but MCC isn’t.”

“We spent a lot of time starting in early 2008 to really go through those procedures with the new (BCC) capabilities in mind to try and figure out what was the best way to choreograph all of that,” added McMillan. “We had done that previously when we just had BAT.” Even then there were things they didn’t foresee. “After Ike, we went back and made some changes to the procedures because of things that we had learned,” she said.

Preparations like pre-storm covering of electronic equipment and mitigation after Ike’s passage were vital in prevention of major damage at Johnson Space Center. Here workers are in the center’s Mission Evaluation Room after the storm. Credit: NASA

Other lessons ranged from IT and connectivity issues to maintaining employee contact information and making sure there was enough staffing. “When Ike came around, the good news was we had done this in terms of actually putting people in place,” said McMillan. A team was dispatched for one night during Gustav, which diverted to Louisiana instead. The bad news, she continued, was that the “one-night Gustav” mentality was still present when Ike hit—the BCC team was dispatched for over a week, supporting operations around the clock, and shift backup couldn’t come fast enough.

While procedures for center shutdown are practiced annually, aftermath recovery was not as well developed. Tracking down the right personnel to access specific systems for contracts, funding, and procurement needed for center recovery and rehabilitation was a challenge. “It’s difficult to plan for the multitude of outcomes,” said Rarick.

Being adaptable and maintaining a global view of the situation were difficult but essential to everyone involved. “The exchanges that we had with the center ops folks were really interesting,” said McMillan. “They really had to think about what type of information they needed to convey to center ops in order for it to mean something in terms of evacuation readiness. Those of us in space station aren’t used to having to think about things like the team that’s working on the roof of whichever building. Meanwhile, the center ops folks are not used to worrying about whether or not the right server is up to support a Progress docking. There were a lot of conversations where we ended up looking across the table at each other saying, ‘Huh?’”

“Even though we had set up a plan and prepared for everything, it was the ability to make changes at the last minute, or accommodate whatever narrow situation you were in to find a way through it that made it successful,” said Rarick.

Read the full Hurricane Ike lessons learned report.