By Kerry Ellis

Navigating to alien planets similar to our own is a universal theme of science fiction. But how do our space heroes know where to find those planets?

Kepler-20e is the first planet smaller than Earth discovered to orbit a star other than the sun. A year on Kepler-20e lasts only six days, as it is much closer to its host star than Earth is to the sun. The temperature at the surface of the planet, around 1,400°F, is much too hot to support life as we know it.

Image Credit: NASA/Ames/JPL-Caltech

And how do they know they won’t suffocate as soon as they beam down to the surface? Discovering these Earth-like planets has taken a step out of the science fiction realm with NASA’s Kepler mission, which seeks to find planets within the Goldilocks zone of other stars: not too close (and hot), not too far (and freezing), but just right for potentially supporting life. While Kepler is only the first step on a long road of future missions that will tell us more about these extrasolar planets, or exoplanets, its own journey to launch took more than twenty years and lots of perseverance.

Looking for planets hundreds of light-years away is tricky. The stars are very big and bright, the planets very small and faint. Locating them requires staring at stars for a long time in hopes of everything aligning just right so we can witness a planet’s transit—that is, its passage in front of its star, which obscures a tiny fraction of the star’s light. Measuring that dip in light is how the Kepler mission determines a planet’s size.

The idea of using transits to detect extrasolar planets was first published in 1971 by computer scientist Frank Rosenblatt. Kepler’s principal investigator, William Borucki, expanded on that idea in 1984 with Audrey Summers, proposing that transits could be detected using high-precision photometry. The next sixteen years were spent proving to others—and to NASA—that this idea could work.

Proving Space Science on the Ground

To understand how precise “high-precision” needed to be for Kepler, think of an Earth-size planet transiting a star similar to our sun, but light-years away. Such a transit would dim the star’s visible light by only 84 parts per million (ppm). In other words, Kepler’s detectors would have to reliably measure changes of less than 0.01 percent.
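The 84 ppm figure follows from simple geometry: while a planet crosses its star, it blocks the fraction of the stellar disk equal to the ratio of the two disk areas, (planet radius / star radius)². The same relation, run backward, is how a measured dip yields a planet’s size. The sketch below is a minimal illustration under stated assumptions (the whole planet crosses the star, limb darkening is ignored); the function names are hypothetical and not part of any Kepler software.

```python
R_SUN_KM = 695_700.0   # IAU nominal solar radius
R_EARTH_KM = 6_371.0   # mean Earth radius

def transit_depth(planet_radius, star_radius):
    """Fractional dip in starlight: the planet's disk area over the star's.
    Assumes the whole planet crosses the star and ignores limb darkening."""
    return (planet_radius / star_radius) ** 2

def planet_radius(depth, star_radius):
    """Invert a measured dip to a planet radius, given the star's radius."""
    return star_radius * depth ** 0.5

depth = transit_depth(R_EARTH_KM, R_SUN_KM)
print(f"Earth-sun transit depth: {depth * 1e6:.0f} ppm ({depth * 100:.4f} percent)")
print(f"radius recovered from that dip: {planet_radius(depth, R_SUN_KM):.0f} km")
```

Because depth scales with the square of the radius, a planet half Earth’s size would dim a sunlike star by only a quarter as much, about 21 ppm.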

Borucki and his team discussed the development of a high-precision photometer during a workshop in 1987, sponsored by Ames Research Center and the National Institute of Standards and Technology, and then built and tested several prototypes.

When NASA created the Discovery Program in 1992, the team proposed their concept as FRESIP, the Frequency of Earth-Size Inner Planets. While the science was highly rated, the proposal was rejected because the technology needed to achieve it wasn’t believed to exist. When the first Discovery announcement of opportunity arose in 1994, the team again proposed FRESIP, this time as a full mission in a Lagrange orbit.

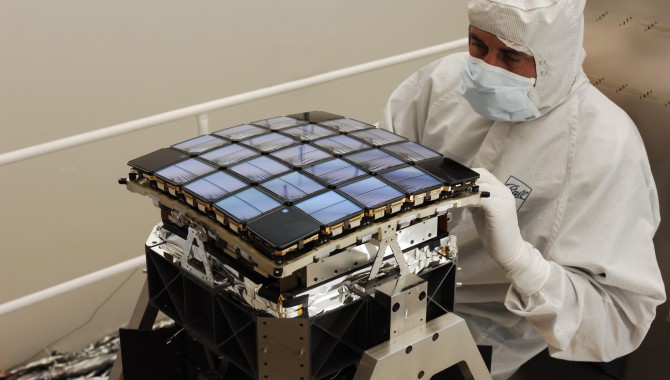

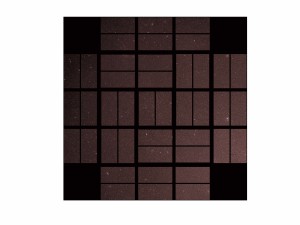

Kepler’s focal plane consists of an array of forty-two charge-coupled devices (CCDs). Each CCD is 2.8 cm by 3.0 cm with 1,024 by 1,100 pixels. The entire focal plane contains 95 megapixels.

Photo Credit: NASA and Ball Aerospace

This particular orbit between Earth and the sun is relatively stable due to the balancing gravitational pulls of Earth and the sun. Since it isn’t perfectly stable, though, missions in this orbit require rocket engines and fuel to make slight adjustments—both of which can get expensive. Reviewers again rejected the proposal, this time because they estimated the mission cost to exceed the Discovery cost cap.

The team proposed again in 1996. “To reduce costs, the project manager changed the orbit to heliocentric to eliminate the rocket motors and fuel, and then costed out the design using three different methods. This time the reviewers didn’t dispute the estimate,” Borucki explained. “Also at this time, team members like Carl Sagan, Jill Tarter, and Dave Koch strong-armed me into changing the name from FRESIP to Kepler,” he recalled with a laugh.

The previous year, the team tested charge-coupled device (CCD) detectors at Lick Observatory, and Borucki and his colleagues published results in 1995 that confirmed CCDs—combined with a mathematical correction of systematic errors—had the 10-ppm precision needed to detect Earth-size planets.
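The article does not spell out the team’s correction method. As a hedged illustration of the general idea behind differential photometry, the sketch below divides a target star’s flux by the summed flux of several comparison stars in the same exposures, so a drift shared by every star cancels while a tiny transit on the target survives. All numbers are hypothetical, and `differential_light_curve` is an illustrative name, not the team’s code.

```python
import math
import statistics

def differential_light_curve(target, comparisons):
    """Divide the target's flux by the summed comparison-star flux at each
    exposure, then normalize so the out-of-transit baseline sits at 1.0."""
    # zip(*comparisons) turns per-star series into per-exposure tuples.
    ensemble = [sum(fluxes) for fluxes in zip(*comparisons)]
    ratios = [t / e for t, e in zip(target, ensemble)]
    baseline = statistics.median(ratios)
    return [r / baseline for r in ratios]

# Hypothetical data: 200 exposures with a 0.2 percent drift shared by every
# star (a stand-in for any common-mode systematic error), plus an 84 ppm
# transit on the target during exposures 90 to 109.
n = 200
drift = [1.0 + 0.002 * math.sin(2 * math.pi * i / n) for i in range(n)]
in_transit = [90 <= i < 110 for i in range(n)]
target = [d * (1 - 84e-6 if hit else 1.0) for d, hit in zip(drift, in_transit)]
comparisons = [[b * d for d in drift] for b in (0.8, 1.3, 2.1)]

corrected = differential_light_curve(target, comparisons)
dip = 1 - statistics.mean(f for f, hit in zip(corrected, in_transit) if hit)
print(f"raw target spread: {(max(target) - min(target)) * 1e6:.0f} ppm")
print(f"dip after correction: {dip * 1e6:.1f} ppm")
```

In the raw series the 84 ppm dip is buried under a drift roughly fifty times larger; after division it is recovered. Real data add noise that does not cancel, which is why precision, not just correction, mattered.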

But Kepler was rejected again because no one believed that high-precision photometry could be automated for thousands of stars. “People did photometry one star at a time. The data analysis wasn’t done in automated fashion, either. You did it by hand,” explained Borucki. “The reviewers rejected it and said, ‘Go build an observatory and show us it can be done.’ So we did.”

They built an automated photometer at Lick Observatory and radio-linked the data back to Ames, where computer programs handled the analysis. The team published their results and prepared for the next Discovery announcement of opportunity in 1998. “This time they accepted our science, detector capability, and automated photometry, but rejected the proposal because we did not prove we could get the required precision in the presence of on-orbit noise, such as pointing jitter and stellar variability. We had to prove in a lab that we could detect Earth-size transits in the presence of the expected noise,” said Borucki.
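Detecting a transit “in the presence of the expected noise” comes down to signal-to-noise: averaging the samples inside a transit shrinks random noise by the square root of their number, so a dip smaller than the per-sample noise can still stand out. The sketch below is a simple box search on a synthetic light curve, offered only to illustrate that idea; it is not the Kepler pipeline’s search method, and the noise level, transit length, and `best_box` name are hypothetical.

```python
import random
import statistics

def best_box(flux, width):
    """Slide a box of `width` samples along a normalized light curve (baseline
    1.0) and return (start, depth, snr) for the most significant dip."""
    sigma = statistics.pstdev(flux)        # crude per-sample noise estimate
    noise_in_box = sigma / width ** 0.5    # noise after averaging `width` samples
    best = (0, 0.0, float("-inf"))
    for start in range(len(flux) - width + 1):
        depth = 1.0 - sum(flux[start:start + width]) / width
        snr = depth / noise_in_box
        if snr > best[2]:
            best = (start, depth, snr)
    return best

# Hypothetical light curve: 2,000 samples with 100 ppm of random noise each
# (a stand-in for jitter and other on-orbit noise) and an 84 ppm transit
# lasting 100 samples, starting at sample 1,200.
rng = random.Random(42)
flux = [1.0 + rng.gauss(0.0, 100e-6) for _ in range(2000)]
for i in range(1200, 1300):
    flux[i] -= 84e-6

start, depth, snr = best_box(flux, width=100)
print(f"dip found at sample {start}: {depth * 1e6:.0f} ppm, SNR {snr:.1f}")
```

Each sample is noisier than the transit is deep, yet 100 in-transit samples cut the noise to about 10 ppm, giving a signal-to-noise ratio near 8.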

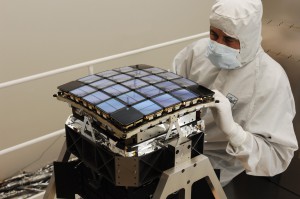

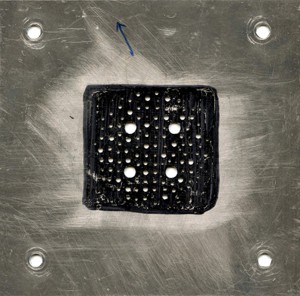

This star plate is an important Kepler relic. It was used in the first laboratory experiments to determine whether charge-coupled devices could produce very precise differential photometry.

Photo Credit: NASA/Kepler Team

The team couldn’t prove it using ground-based telescope observations of stars because the atmosphere itself introduces too much noise. Instead, they developed a test facility to simulate stars and transits in the presence of pointing jitter. A thin metal plate with holes representing stars was illuminated from below, and a prototype photometer viewed the light from the artificial stars while it was vibrated to simulate spacecraft jitter.

The plate had many laser-drilled holes with a range of sizes to simulate the appropriate range of brightness in stars. To study the effects of saturation (very bright stars) and close-together stars, some holes were drilled large enough to cause pixel saturation and some close enough to nearly overlap the images.

“To prove we could reliably detect a brightness change of 84 ppm, we needed a method to reduce the light by that amount. If a piece of glass is slid over a hole, the glass will reduce the flux by 8 percent—about one thousand times too much,” Borucki explained. “Adding antireflection coatings helped by a factor of sixteen, but the reduction was still sixty times too large. How do you make the light change by 0.01 percent?

“There really wasn’t anything that could do the job for us, so we had to invent something,” said Borucki. “Dave Koch realized that if you put a fine wire across an aperture—one of the drilled holes—it would block a small amount of light. When a tiny current is run through the wire, it expands and blocks slightly more light. Very clever. But it didn’t work.”


With a current, the wire not only expanded, it also curved. As it curved, it moved away from the center of a hole, thereby allowing more light to come through, not less.

“So Dave had square holes drilled,” said Borucki. “With a square hole, when the wire moves off center, it doesn’t change the amount of light. To keep the wire from bending, we flattened it.” The results demonstrated that transits could be detected at the precision needed even in the presence of on-orbit noise.
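The numbers in Borucki’s account can be checked with a little arithmetic and geometry. The sketch below first estimates the glass loss from Fresnel reflection, assuming a typical glass index of 1.5 (an assumption, not from the article), then models the wire as a thin straight strip to show why bowing let more light through a round hole but left a square hole unaffected. The hole and wire sizes, the 0.1 percent widening, and the treatment of bowing as a sideways slide are all hypothetical simplifications.

```python
import math

TRANSIT = 84e-6  # Earth-size transit depth from the article

# Step 1: why a glass slide is far too crude. Fresnel reflectance at normal
# incidence for one air-glass surface, assuming glass index n = 1.5.
n = 1.5
r_surface = ((n - 1) / (n + 1)) ** 2      # about 4 percent per surface
bare_loss = 1 - (1 - r_surface) ** 2      # front and back surfaces
coated_loss = bare_loss / 16              # antireflection: sixteen times better
print(f"bare glass: {bare_loss:.1%} loss, {bare_loss / TRANSIT:.0f}x too large")
print(f"coated glass: {coated_loss:.2%} loss, {coated_loss / TRANSIT:.0f}x too large")

# Step 2: why a round hole failed and a square one worked. A thin wire of
# width w is treated as a straight strip; bowing is modeled as the strip
# sliding a distance `offset` off center (a hypothetical simplification).
def covered_round(radius, w, offset):
    """Share of a round hole's area hidden by the strip (thin-wire approximation)."""
    if abs(offset) >= radius:
        return 0.0
    chord = 2 * math.sqrt(radius ** 2 - offset ** 2)  # strip length inside hole
    return w * chord / (math.pi * radius ** 2)

def covered_square(side, w, offset):
    """Share of a square hole hidden by a strip parallel to one edge; the
    strip always spans the full side, so sliding it changes nothing."""
    return w / side if abs(offset) < side / 2 else 0.0

# Hypothetical sizes in micrometres: a 1,000 um hole and a 25 um wire that a
# current widens by 0.1 percent and bows 100 um off center.
w_cold, w_hot, bow = 25.0, 25.0 * 1.001, 100.0
round_change = covered_round(500.0, w_hot, bow) - covered_round(500.0, w_cold, 0.0)
square_change = covered_square(1000.0, w_hot, bow) - covered_square(1000.0, w_cold, 0.0)
print(f"round hole:  {round_change * 1e6:+.0f} ppm of the hole blocked")
print(f"square hole: {square_change * 1e6:+.0f} ppm of the hole blocked")
```

In the round hole, the bowed wire crosses a shorter chord, so the blocked area shrinks even though the wire got wider. In the square hole, only the widening counts, producing a small, controllable change on the same scale as an Earth-size transit.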

After revising, testing, publishing, and proposing for nearly twenty years, Kepler was finally approved as a Discovery mission in 2001.

Engineering Challenges

After Kepler officially became a NASA mission, Riley Duren from the Jet Propulsion Laboratory joined the team as project systems engineer, and later became chief engineer. To help ensure a smooth progression, Duren and Borucki set out to create a common understanding of the scientific and engineering trade-offs.

“One of the things I started early with Bill and continued throughout the project was to make sure that I was in sync with him every step of the way, because, after all, the reason we’re building the mission is to meet the objectives of the science team,” said Duren. “It was important to develop an appreciation for the science given the many complex factors affecting Kepler mission performance, so early on I made a point of going to every science team meeting that Bill organized so I could hear and learn from the science team.”

The result was something they called the science merit function: a model of the science sensitivity of mission features—the effects on the science of various capabilities and choices. Science sensitivities for Kepler included mission duration, how many stars would be observed, the precision of the photometer’s light measurements, and how many breaks for data downlinks could be afforded. “Bill created a model that allowed us to communicate very quickly the sensitivity of the science to the mission,” explained Duren, “and this became a key tool for us in the years that followed.”

The science merit function helped the team determine the best course of action when making design trade-offs or descope decisions. One trade-off involved the telecommunications systems. Kepler’s orbit is necessary to provide the stability needed to stare continuously at the same patch of sky, but it puts the observatory far enough away from Earth that its telecommunications systems need to be very robust. The original plan included a high-gain antenna that would deploy on a boom and point toward Earth, transmitting data without interrupting observations. When costs needed to be cut later on, descoping the antenna offered a way to save millions. But this would mean turning the entire spacecraft to downlink data, interrupting observations.

A single Kepler science module with two CCDs and a single field-flattening lens mounted onto an Invar carrier. Each of the twenty-one CCD science modules is covered by a sapphire lens. The lenses flatten the image onto the plane of the CCDs for best focus.

Photo Credit: NASA/Kepler Mission

“Because we’re looking for transits that could happen any time, it wasn’t feasible to rotate the spacecraft to downlink every day. It would have had a huge impact on the science,” Duren explained. So the team had to determine how frequently it could be done, how much science observation time could be lost, and how long it would take to put Kepler back into its correct orientation. “We concluded we could afford to do that about once a month,” said Duren. Since the data would be held on the spacecraft longer, the recorder that stored the data had to be improved, which increased the recorder’s cost even as eliminating the high-gain antenna reduced the mission’s cost.
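The downlink trade has two competing costs: each turn toward Earth interrupts observing, and every day between downlinks adds data the onboard recorder must hold. The article gives neither Kepler’s downlink duration nor its data rate, so the sketch below uses hypothetical inputs purely to show the shape of the trade; it is a toy illustration of the kind of question the science merit function addressed, not that model itself, and `downlink_cost` is an illustrative name.

```python
def downlink_cost(days_between, gap_hours, gb_per_day):
    """Return (share of observing time lost, recorder capacity needed in GB)
    for a schedule that turns the spacecraft to Earth every `days_between` days."""
    lost = min(1.0, gap_hours / (days_between * 24.0))
    storage = days_between * gb_per_day
    return lost, storage

# Hypothetical inputs: each downlink costs 12 hours of observing (turn to Earth,
# transmit, turn back and settle) and the camera produces 1 GB of data per day.
for days in (1, 7, 30):
    lost, storage = downlink_cost(days, gap_hours=12.0, gb_per_day=1.0)
    print(f"every {days:>2} days: {lost:6.1%} of observing lost, "
          f"recorder holds {storage:.0f} GB")
```

Lost observing time falls in inverse proportion to the interval while required storage grows linearly with it, which is why a monthly schedule both protected the science and demanded a bigger recorder.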

“The science merit function that Bill developed was a bridge between the science and engineering that we used in doing these kind of trade studies,” said Duren. “In my opinion, the Kepler mission was pretty unique in having such a thing. And that’s a lesson learned that I’ve tried to apply to other missions in recent years.”

The tool came in handy as Kepler navigated through other engineering challenges, ensuring the mission could look at enough stars simultaneously for long periods of time, all the while accommodating the natural noise that comes from long exposures, spacecraft jitter in orbit, and instrumentation. This meant Kepler had to have a wide enough field of view, low-noise detectors, a large aperture to gather enough light, and very stable pointing. Each presented its own challenges.

Kepler’s field of view is nearly 35,000 times larger than Hubble’s. It’s like a very large wide-angle lens on a camera and requires a large number of detectors to see all the stars in that field of view.

Ball Aerospace built an instrument that could accommodate about 95 million pixels—essentially a 95-megapixel camera. “It’s quite a bit bigger than any camera you’d want to carry around under your arm,” Duren said. “The focal plane and electronics for this camera were custom built to meet Kepler’s unique science objectives. The entire camera assembly resides inside the Kepler telescope, so a major factor was managing the power and heat generated by the electronics to keep the CCD detectors and optics cold.”

This image from Kepler shows the telescope’s full field of view—an expansive star-rich patch of sky in the constellations Cygnus and Lyra stretching across 100 square degrees, or the equivalent of two side-by-side dips of the Big Dipper.

Image Credit: NASA/Ames/JPL-Caltech

What might be surprising is that for all that precision, Kepler’s star images are not sharp. “Most telescopes are designed to provide the sharpest possible focus for crisp images, but doing that for Kepler would have made it very sensitive to pointing jitter and to pixel saturation,” explained Duren. “That would be a problem even with our precision pointing control. But of course there’s a trade-off: if you make the star images too large [less sharp], each star image would cover such a large area of the sky that light from other stars would be mixed into the target star signal, which could cause confusion and additional noise. It was a careful balancing act.”

And it’s been working beautifully.
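Duren’s focus trade can be illustrated with a toy model of a star image spread over pixels. The sketch below assumes a Gaussian star image (real Kepler star images are not Gaussian), a neighbor star 4 pixels away, and a 3-pixel-wide photometric aperture, all hypothetical choices. It tracks three quantities as the blur grows: the share of light in the brightest pixel (a proxy for saturation), how much that pixel’s light changes when the star drifts a tenth of a pixel (a crude proxy for jitter sensitivity), and how much of the neighbor’s light leaks into the target’s aperture (confusion).

```python
import math

def band_fraction(center, lo, hi, sigma):
    """Fraction of a 1D Gaussian (mean `center`, width `sigma`) inside [lo, hi]."""
    s = sigma * math.sqrt(2.0)
    return 0.5 * (math.erf((hi - center) / s) - math.erf((lo - center) / s))

def peak_pixel_fraction(sigma):
    """Share of a star's light in its brightest pixel when centered on it.
    A larger share means a bright star saturates sooner."""
    return band_fraction(0.0, -0.5, 0.5, sigma) ** 2

def jitter_sensitivity(sigma, shift=0.1):
    """Relative change in that pixel's light when the star drifts `shift`
    pixels along x: a crude proxy for sensitivity to pointing jitter."""
    still = band_fraction(0.0, -0.5, 0.5, sigma)
    moved = band_fraction(shift, -0.5, 0.5, sigma)
    return abs(moved - still) / still

def neighbor_contamination(sigma, separation, aperture=3.0):
    """Share of a neighbor's light, `separation` pixels away along x, that
    lands in a square aperture `aperture` pixels wide centered on the target."""
    half = aperture / 2
    return (band_fraction(separation, -half, half, sigma)
            * band_fraction(0.0, -half, half, sigma))

for sigma in (0.5, 1.0, 2.0):  # blur widths in pixels (hypothetical)
    print(f"sigma {sigma} px: peak pixel {peak_pixel_fraction(sigma):.1%}, "
          f"jitter change {jitter_sensitivity(sigma):.2%}, "
          f"neighbor leak {neighbor_contamination(sigma, 4.0):.2%}")
```

Widening the blur lowers the peak-pixel share and the jitter proxy but raises the neighbor’s leakage, so the best focus sits somewhere in between: the balancing act Duren describes.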

Extended Mission

Kepler launched successfully in 2009. After taking several images with its “lens cap” on to calculate the exact noise in the system, the observatory began its long stare at the Cygnus-Lyra region of the Milky Way. By June 2012, it had confirmed the existence of seventy-four planets and identified more than two thousand planet candidates for further observation. And earlier in the year, NASA approved it for an extended mission—to 2016.

“The Kepler science results are essentially a galactic census of the Milky Way. And it represents the first family portrait, if you will, of what solar systems look like,” said Duren.

Kepler’s results will be important in guiding the next generation of exoplanet missions. Borucki explained, “We all know this mission will tell us the frequency of Earth-size planets in the habitable zone, but what we want to know is the atmospheres of these planets. Kepler is providing the information needed to design those future missions.”
