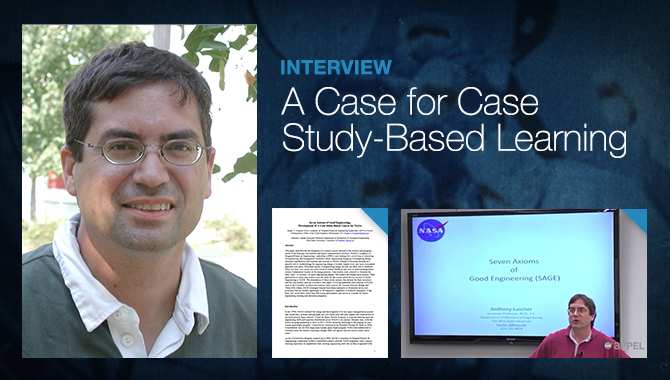

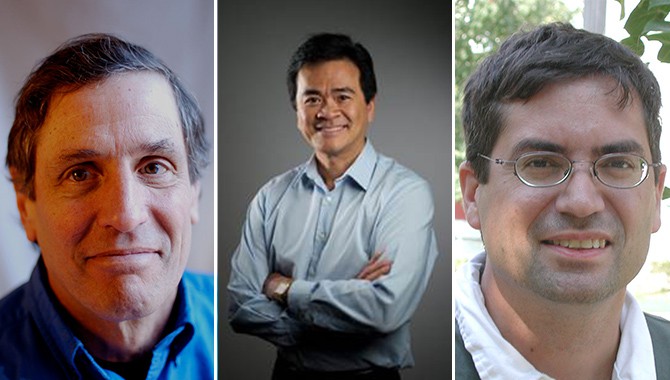

Author Don Cohen (left), Orbiting Carbon Observatory-2 project manager Ralph Basilio (center), and APPEL instructor Anthony Luscher (right) were among the speakers at Knowledge 2020 2.0.

What do some of the greatest engineering innovations have in common? They started with a mistake.

NASA’s Chief Knowledge Officer recently hosted a gathering of knowledge management experts from across the agency and private industry. Over three days, Knowledge 2020 2.0 served as a forum for benchmarking, a resource for problem solving, and a foundation for building networks that help organizations share critical lessons.

One theme that emerged during both formal presentations and informal conversations was the value of admitting and analyzing mistakes. Don Cohen, co-author of *In Good Company: How Social Capital Makes Organizations Work*, identified two kinds of error: “screw-ups” (the often careless mistakes that dog complex projects) and “exploration failures” (the inevitable, instructive missteps on the path to something new). Both are important sources of learning.

A key issue is whether an organization recognizes that acknowledging failure can pave the way toward success, or instead creates an environment that discourages individuals from owning up to their mistakes. “Organizations that accept failure as a fact of life are in a position to incorporate the learnings that mistakes provide,” said Cohen. This is crucial because unacknowledged mistakes can endanger a project.

Organizations that learn from their mistakes share certain characteristics. They empower employees to admit errors without fear of reprimand or even job loss, and they conduct no-fault analyses that focus on the process, not the individual. Employee trust, strong working relationships, and a shared mission (the sense that people are working together toward a meaningful goal) are important indicators of an organization that can learn from failure in a positive way.

“NASA exemplifies many of these characteristics,” said Cohen, who cautioned that the common refrain of “failure is not an option” should be balanced by an open attitude toward learning from setbacks.

One NASA project that embraced the “failure is not an option” approach overall, while also raising the bar for learning from its own experience, is the Orbiting Carbon Observatory-2 (OCO-2). Project Manager Ralph Basilio shared his experience at Knowledge 2020 2.0.

“You have to be willing to risk the lowest lows in order to achieve the highest highs,” he said. Over more than a decade, his project has experienced both. In 2009, the team launched the original OCO, which was designed to make precise, time-dependent global measurements of atmospheric carbon dioxide to help scientists better understand the sources and “sinks” of the greenhouse gas. When the payload fairing failed to separate minutes after launch, the spacecraft and its instrument ended up in the ocean near Antarctica.

The team was given a rare chance to rebuild, and with it a unique opportunity to actively apply the lessons and knowledge gained from OCO to maximize the likelihood of success with OCO-2. They painstakingly gathered input from everyone involved to ensure their lessons-learned document was actionable. Ultimately, 78 lessons were identified for implementation. Three in particular acknowledged and corrected errors in the original OCO instrument. The team identified the problems without pointing fingers, examined the errors to determine appropriate solutions, and applied what they learned.

Dr. Anthony Luscher, APPEL instructor and Associate Professor at The Ohio State University, also emphasized the value of mistakes in a learning context.

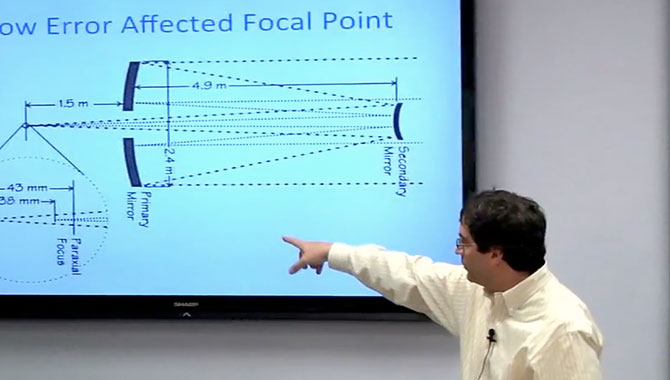

“In engineering, you can’t get anything done without risk,” said Luscher. To manage that risk, engineers need to maintain archival knowledge: awareness of key lessons learned on earlier projects, some completed as much as 100 years ago, so they can avoid repeating past mistakes.

In his APPEL course, Seven Axioms of Good Engineering (SAGE), Luscher uses both positive case studies (those with desired outcomes) and negative ones. “Originally I wanted to include an even balance of positive and negative case studies,” he said. “But people learn from failure.” As a result, case studies in which mistakes led to catastrophic events are particularly powerful learning tools.

“An organization committed to learning from failure enhances its potential for success,” said Cohen. The key is to uncover mistakes as quickly as possible, identify the underlying issues, and address them on a procedural rather than personal basis. Through its knowledge services, APPEL courses, forums such as Knowledge 2020 2.0, and other internal resources, NASA remains committed to ensuring that its workforce recognizes and retains the lessons the agency has learned over the decades. In this way, failure, an inevitable part of complex, ambitious work, is never without value.

Learn more about knowledge management and resources at NASA.

Read the APPEL case study OCO-2: A Second Chance to Fly.

Read Seven Axioms of Good Engineering: Development of a Case Study-Based Course for NASA.