NASA’s Michelle Munk, Ashley Korzun and Eric Nielsen discuss the impact of state-of-the-art computing on future NASA missions.

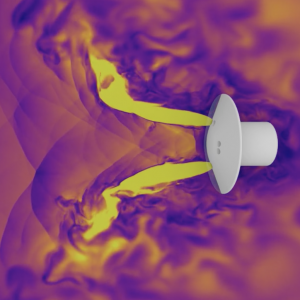

High-performance computing plays a key role in NASA’s preparation for human missions to Mars. A team of agency scientists and engineers is using the Department of Energy Oak Ridge National Laboratory’s Summit, the nation’s most powerful supercomputer, to simulate retropropulsion, or engine-powered deceleration, for landing humans on Mars.

In this episode of Small Steps, Giant Leaps, you’ll learn about:

- A significant shift in how to approach computational modeling and simulation of problems

- How NASA is using supercomputing technology to tackle mission challenges

- Engineering benefits of giant steps forward in simulation fidelity

Related Resources

Summit simulates how humans will ‘brake’ during Mars landing

Episode 45: Digital Transformation

APPEL Courses:

Creativity and Innovation (APPEL-vC&I)

Foundations of Aerospace at NASA (APPEL-vFOU)

Human Spaceflight and Mission Design (APPEL-vHSMD)

Manned Mission & System: Design Lab (APPEL-vMMSD)

Ashley Korzun

Credit: NASA

Ashley Korzun joined NASA Langley Research Center in 2012 as a research aerospace engineer. Her primary specialization is in entry, descent and landing aerosciences and systems with an emphasis on technology development for propulsive descent and landing on the Earth, Moon and Mars. Korzun is currently the Deputy Principal Investigator for the Entry Systems Modeling Project and the Deputy Principal Investigator for the Plume-Surface Interaction Project. She holds a bachelor’s in aerospace engineering from the University of Maryland – College Park and a master’s and doctorate in aerospace engineering from Georgia Tech.

Michelle Munk

Credit: NASA

Michelle Munk currently serves as NASA’s Entry, Descent and Landing (EDL) Principal Technologist and System Capability Lead. In that role since 2013, Munk is responsible for identifying, prioritizing, planning, advocating and guiding the agency’s technology developments that enable future spacecraft to fly through all planetary atmospheres and land safely and more precisely. She has fulfilled a variety of roles within the EDL field, including Deputy Project Manager and Subsystem Lead for the Mars Science Laboratory Entry, Descent and Landing Instrumentation suite, which entered the Mars atmosphere and returned high-quality heatshield data in 2012. Munk began her career as a trajectory analyst for human Mars missions at NASA Johnson Space Center in 1991 and has since worked for four different NASA centers. She was a cooperative education student at Virginia Tech, where she earned a bachelor’s in aerospace engineering.

Eric Nielsen

Credit: NASA

Eric Nielsen is a Senior Research Scientist with the Computational AeroSciences Branch at NASA Langley Research Center, where he has worked for the past 28 years. Nielsen specializes in the development of computational aerodynamics software for the world’s most powerful computer systems. The software has been distributed to thousands of organizations around the country and supports major national research and engineering efforts at NASA and in industry, academia, the Department of Defense and other government agencies. Nielsen has published extensively on the subject and has given presentations around the world on his work. He has served as the Principal Investigator on agency-level projects at NASA as well as leadership-class computational efforts sponsored by the Department of Energy. He received his doctorate in aerospace engineering from Virginia Tech.

Transcript

Ashley Korzun: Simulations that would take us weeks or months — or would just be too large to ever solve at all using conventional computing approaches — we’re now able to complete those simulations and get tangible, really useful engineering results in hours to days to weeks. So, it’s completely changing how we will approach the utilization, the fidelity of simulation.

Michelle Munk: This is the future – high-performance computing, supercomputing — really transforming our digital tools and applying them to greater problems is really, I see, the challenge of the next decade.

Eric Nielsen: We are going to be so reliant on computations and modeling and simulation going forward that our workforce has to have the opportunity to get the exposure and the training in those areas just in the same way that they do with fluids or structures or chemistry or any of these other areas.

Deana Nunley (Host): Welcome to Small Steps, Giant Leaps, a NASA APPEL Knowledge Services podcast featuring interviews and stories that tap into project experiences to share best practices, lessons learned and novel ideas.

I’m Deana Nunley.

NASA is using emerging state-of-the-art supercomputing resources to get ready to land humans on Mars. Today we’re talking with members of a team of scientists and engineers who successfully simulated retropropulsion – or engine-powered deceleration – for landing humans on the Red Planet – demonstrating revolutionary performance and a significant shift in how to approach the computational modeling and simulation of problems.

Let’s start with brief introductions.

Munk: Hi, I’m Michelle Munk and I’m the Entry, Descent and Landing System Capability Lead for NASA. And I work for the Space Technology Mission Directorate.

Korzun: And I’m Ashley Korzun. I’m a Research Aerospace Engineer from NASA Langley Research Center. And I work in the Atmosphere Flight and Entry Systems Branch predominantly on entry, descent and landing.

Nielsen: Hi, my name is Eric Nielsen. I’m with the Computational AeroSciences Branch at NASA Langley Research Center, where we specialize in the development of large-scale computational fluid dynamics software.

Host: Thank you all for joining us on the podcast.

Munk: Thank you, Deana. Great to be here.

Host: Michelle, from an agency perspective, what hard problems are you trying to help solve in preparation for landing humans on Mars?

Munk: Well, there are many hard problems to solve, but my focus of course is on entry, descent and landing. And to land humans on Mars, we’re going to need several orders of magnitude improvement in many areas of EDL. To date, we’ve landed a MINI Cooper-sized rover on Mars with the Mars Science Laboratory. And, of course we have another one on the way — Mars 2020 Perseverance Rover — and those weigh about one metric ton. And for human landings, we need about 20 metric tons.

We need to do that very precisely to put the humans in a very particular location where they can do good science and set up a base camp if you might. And so we have lots of challenges to solve that are way advanced over the state-of-the-art.

Host: Eric, what technologies and tools are you and your team using to help solve these hard problems?

Nielsen: So, Deana, we come from the tool development side of things. And so we are trying to couple the most advanced algorithms and numerical methods that are available, but we’re trying to couple those with the most powerful supercomputing systems that are available out there. So there’s been a tremendous kind of paradigm shift that we’ll talk about today in terms of supercomputing technology over the last five to 10 years. And our group is tasked with trying to harness that tremendous amount of compute to try and help tackle some of the real mission problems that Michelle just spoke about.

Host: Ashley, how would you say the work is progressing from an engineering perspective?

Korzun: Sure. So Eric mentioned that he and his team are largely on the tool development side and really getting us into a position to utilize efficiently these emerging state-of-the-art supercomputing resources. The folks that I work with are largely on the application side. So we need to be able to utilize these computational tools and methods that Eric’s team is developing and apply them to the problems that Michelle has mentioned there as well.

So, from an engineering perspective, we’ve made huge strides just in the last couple of years. Overall, the entry, descent and landing, or EDL, discipline has made tremendous strides in the last 15 or 20 years or so, taking systems and ideas that were first conceptualized in the 1960s and 1970s, and really bringing them into the modern sphere and getting us ready for that eventual mission infusion for the landing of humans on Mars.

Host: Is this capability unique?

Nielsen: So, I do believe it’s certainly state-of-the-art in terms of aerospace computational fluid dynamics or the study of how a fluid flows or a gas flows around a vehicle. As we’ll dig into today, there’s many technologies available in the supercomputing space today. But kind of the flavor of the day right now is graphics processing units type of architectures, or GPUs.

And we were fortunate to get started in this particular space close to about 10 years ago and working very closely with one of the leading vendors, NVIDIA there. And we’ve just been very fortunate to be able to partner up with some really, really sharp people over the years who have come alongside us and helped to get us to where we are today.

Korzun: I would just add that on top of what Eric just said in terms of uniqueness of this capability, we are now able to look at problems that are much, much larger than anything we’ve been able to look at in the past and it’s a direct result of the development of this capability. All of this is something we need to be able to run full-scale flight systems in a Mars environment.

Something Michelle mentioned, we’ve landed payloads that are on the order of about the size of a MINI Cooper, about one metric ton. We’re now talking about having to land systems that are more on the order of a two-story house. So just the physical size and the complexity of the problems we’re now trying to simulate are almost as unique as the capabilities we need in which to do those simulations.

Munk: Yeah, that’s a great point, Ashley. The vehicles that we’re looking at now have around a 15 or 20 meter diameter aeroshell out in front of them to help them slow down in the thin Mars atmosphere. In addition, parachutes are ineffective for these large systems. And so we’re using supersonic retropropulsion, turning on our landing engines at a much higher velocity and higher altitude than we’ve ever done before. And so those engine plumes going out into an oncoming supersonic flow is really a unique problem that we haven’t really looked at before.

Host: How would you characterize this shift in the computing approach?

Nielsen: So, over the last 15 to 20 years, the computational community has had it fairly straightforward in terms of the basic CPU technology that’s been available. It hasn’t changed all that much over the last decade or two. However, as we go to bigger and bigger, more capable systems, the constraints on power become a real looming consideration that the community has had to address. And essentially what this has forced us to do is look at architectures, where we can’t just continuously turn up the clock speed and essentially get a free lunch out of our codes just by buying a faster processor.

And so, what the vendors have had to do there because these clock speeds result in much higher power requirements, what the vendors have done is turned to vastly more parallelism instead. So many, many more processing elements operating at relatively lower clock speeds to get the improved performance out of the software. And so that becomes a real challenge for the programming community to adapt the software to operate on architectures which are substantially different. So in the past, we’ve had to parallelize or execute things simultaneously on the order of 50 ways on a given processor.

These days, for example, with GPUs we’re looking at parallelism on the order of 100,000 ways within a single processor. And then at scale, we’re approaching billion way parallelism right now in our software. And so it becomes a real, real challenge for the programmer to identify that many avenues of parallelism in an algorithm. Many of our legacy algorithms are serial in nature. And so putting them on an architecture that demands vast amounts of parallelism is a tremendous change in thinking and a tremendous challenge to implement. And so all of these things we have to learn how to deal with and learn how to address.

So along with that goes much more elaborate programming models. So, many programmers out there are used to coding their large-scale science codes in things like C or C++ or Fortran. Well, we need to be able to express many more directions of parallelism or other types of aspects of the code to help the architecture execute most efficiently. And so at the end of the day, quite often many codes are faced with complete rewrites of their software to be able to efficiently leverage these newer, more specialized architectures.

And finally, the programmer has to have a much more intimate relationship with the hardware itself. So the CPU technology over the years has been quite forgiving and it’s pretty straightforward to get decent performance out of those types of architectures. But going forward, we’re finding that the programmers have to be much more intimately familiar with the hardware and being able to express the algorithms as close to that bare metal as possible. And so this is a dramatic change in how we develop our tools for these types of systems.
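The shift Nielsen describes, from algorithms that are serial in nature to work that can be spread across many processing elements, can be illustrated with a minimal Python sketch. It is not drawn from NASA’s software; the function names (`running_sum_serial`, `scale_independent`, `scale_in_chunks`) are hypothetical and chosen only for illustration. The first function carries a dependency from one step to the next, so its iterations must run in order. The second has no such dependency, so its work can be split into chunks and handled in any order with the same result.

```python
# Illustrative sketch only: hypothetical names, not taken from any NASA code.

def running_sum_serial(values):
    """Loop-carried dependency: step i needs the total from step i-1,
    so the iterations cannot simply be handed to separate workers."""
    total = 0.0
    out = []
    for v in values:
        total += v
        out.append(total)
    return out


def scale_independent(values, factor):
    """No dependency between elements: each result uses only its own input,
    so every element could be computed at the same time."""
    return [factor * v for v in values]


def scale_in_chunks(values, factor, n_chunks):
    """Split the independent work into chunks and process them out of order.

    The chunks are deliberately handled in reverse to show that the order
    of execution does not affect the answer."""
    size = max(1, -(-len(values) // n_chunks))  # ceiling division
    chunks = [values[i:i + size] for i in range(0, len(values), size)]
    results = [scale_independent(c, factor) for c in reversed(chunks)]
    results.reverse()  # restore the original chunk order
    return [x for chunk in results for x in chunk]


if __name__ == "__main__":
    data = [1, 2, 3, 4, 5]
    print(running_sum_serial(data))
    print(scale_in_chunks(data, 2, 3) == scale_independent(data, 2))
```

In this sketch the chunks stand in for the many processing elements of a GPU. Finding work of the second kind inside an algorithm written in the first style is the kind of rethinking the discussion above points to.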

Korzun: And I’ll just add there. So, let’s say you’re able to achieve what Eric and his team have been able to do in implementing these almost revolutionary approaches to computer programming and the integration of the software development with the hardware itself. When you look at how do we actually utilize that capability and what has that meant for us specifically, Michelle mentioned supersonic retropropulsion. This is an incredibly challenging problem for us. We have very large rocket engines on a very large vehicle flying faster than the speed of sound on another planet in a Martian predominantly CO2 atmosphere. How do we simulate that and how can we do that with the requisite fidelity to have a high degree of confidence that we’re going to be able to control this vehicle in an actual flight implementation?

So, it’s been completely game-changing, this computing capability that Eric has talked about here. A total paradigm shift in how we will be able to approach the computational modeling and simulation of problems, including these as well as other very large physically intensive simulations moving forward.

Specifically, in our case, we’re looking at simulations that would take us weeks or months or would just be too large to ever solve at all using conventional computing approaches. We’re now able to complete those simulations and get tangible, really useful engineering results in hours to days to weeks. So it’s completely changing how we will approach the utilization, the fidelity of simulation as we march toward those big exploration objectives in the future.

Nielsen: Ashley, I think that’s a great point about fidelity and maybe can you expand a little bit on the physical, the disparate scales in both time and space as well as the fact that we tend to think about fluids first and foremost, but how many disciplines are necessary to judge the viability of an aerospace system these days?

Korzun: Certainly, so we’ve talked about various length scales and time scales. When you’re doing entry, descent and landing at Mars for example, that entire process takes only about seven minutes from you going in excess of 10,000 miles per hour down to zero. You’ve got to be able to simulate everything that’s going on with that vehicle as it decelerates through the atmosphere and as you are guiding and controlling it into a very targeted location on the surface.

Michelle’s mentioned the scale of these vehicles, 15 to 20 meters in diameter. There are flow structures that can be important and affect what it’s going to take to control this vehicle that have length scales on the order of centimeters, as an example. And where’s that rocket engine exhaust going in front of the vehicle? Tens of meters. Almost a football field length upstream of the vehicle. And you’ve got to understand everything that’s going on there and how it affects the vehicle itself. That’s just the fluids perspective. Then you’ve got to think about that guidance, navigation and control element and all of this has a temporal component to it as well.

Flexible structures, thermal structural modeling. This is still a high heating entry environment you’re dealing with as well. So there are just so many different related physics disciplines that you eventually need to be able to bring into this modeling sphere. And these problems become computationally — they’re very, very, very large, but you have to do this work computationally as you’re just — these are not systems you’re ever going to be able to test completely in an Earth environment. You’re certainly not going to be able to fit these types of systems — again, we’re talking the size of a two-story house here — into our ground test facilities like our wind tunnels and our arc jets, and a lot of the other facilities we have at NASA centers around the country.

Nielsen: So, Ashley, I think that’s a great point that it’s hard to sometimes convey to the layman the computational requirements. So we may spend hundreds of millions of equivalent CPU hours to simulate a fraction of a second in a real problem like this. And yet we need to be able to fly this mission, and as Ashley just said, it takes us seven minutes to descend down to the surface. And so the temporal and spatial scales are just very challenging here. And so that’s why we have to bring the big compute to bear.
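For a sense of scale, the figures Korzun gives for the descent (more than 10,000 miles per hour down to zero in about seven minutes) imply an average deceleration that can be worked out directly. The short Python sketch below does that arithmetic. The constant-deceleration framing is an assumption made here for illustration, not something the speakers state, and the function name `average_deceleration_ms2` is hypothetical. Because the quoted speed is a lower bound, the result is a lower bound as well, and an average by definition says nothing about peak loads.

```python
# Back-of-the-envelope arithmetic using only the figures quoted in the interview.
# Assumption (ours): treat the descent as a single average deceleration.

MPH_TO_MS = 0.44704   # exact: 1609.344 m per mile / 3600 s per hour
G0 = 9.80665          # standard gravity in m/s^2


def average_deceleration_ms2(initial_speed_mph, duration_s):
    """Average deceleration in m/s^2 when slowing from the given speed to zero."""
    return initial_speed_mph * MPH_TO_MS / duration_s


if __name__ == "__main__":
    a = average_deceleration_ms2(10_000, 7 * 60)
    print(f"average deceleration: {a:.2f} m/s^2, about {a / G0:.2f} g")
```

The average comes out slightly above one Earth g sustained for the full seven minutes.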

Host: What are some of the other day-to-day challenges you face?

Munk: I think Ashley and Eric have endured the lion’s share of technical challenges, but from a programmatic standpoint, this is an effort to convert codes and to really get people, engineers, software developers working together who can really understand and apply these tools. This is all lead time of years before we actually have a working vehicle or even a demonstration vehicle.

So I think programmatically one of my challenges is to properly communicate the importance of this effort and the long-term nature of the effort to stakeholders, so that we make sure we’re getting proper attention and putting the proper amount of effort into these systems, even though the human landing on Mars may be a couple decades away.

Nielsen: Yeah. I’d like to echo what Michelle said. There’s a lot of moving parts here. Certainly the technical challenges in the tool development side of things are very challenging and things are constantly changing and it’s tough to keep up. But I find that much of my time these days is just devoted to the constant coordination with partners and all the moving parts. So we rely on a lot of partners not only within NASA across the different centers, but it’s been critical to have partners at say the Department of Energy or the vendors like NVIDIA or Department of Defense or academia, the very basic research with the faculty and the post-docs and the Ph.D. students.

And it really takes experts coming to the table from all different walks of life to kind of realize this capability. And so I find just the constant coordination of all the different pieces takes a lot of time. And I think sometimes that maybe gets lost in the wash. And another challenge I would say is things move so fast in this space that we find that sometimes the government processes are just not agile enough to move at a fast enough pace. And so we’ve got a lot of activities in the agency looking at trying to transform those types of processes and enable us to be a bit more agile in different directions.

Korzun: I would add just echoing on as well what Michelle and Eric have talked about at different levels when we talk about kind of integration and coordination across different groups, different activities. Just within the context of say the Mars architecture itself, it’s a constantly evolving landscape in terms of what our requirements on that system are going to be. We’re working with a variety of conceptual configurations and operational scenarios where how do I actually fly this system?

So being able to be responsive to those changes and almost getting to a point where we now have a computing capability to get out in front and use this computing capability to start influencing some of those design decisions much earlier in the process. It’s something that just when you start talking systems that are so complex on a timeline that is many years from now. This isn’t a system we’re going to be flying in the next four or five years as an example.

You do start to see those types of technical and integration challenges appearing more on a day-to-day basis, even in normal times. And as we all know, we’re certainly not living in normal times here in 2020. So while we do work with a large team that is geographically dispersed across the country, in the normal sense, things are certainly more challenging with everyone being in this work-from-home posture for COVID.

Host: And in spite of these challenges and the technical and integration challenges that you mentioned, what is it that’s making this effort successful?

Munk: I would say from my perspective it’s the grassroots efforts and the ingenuity of really folks like Eric and Ashley making these seemingly small, but at the same time very large strides and really giving us a really good test case that we can use as a demonstration of our success.

I think without the efforts that they’ve undertaken so far, we’d be five years behind. So they’ve done an enormous amount of work in a short amount of time, and it just takes really people connecting at the working level and having those great ideas.

Nielsen: I would completely agree. I think it’s all about the people and having the right skill sets on board. Establishing partnerships that again, that can come alongside us. NASA is not the center of gravity for the computational world. And so we’ve had to reach out and kind of establish a coalition of the willing to help us with these things. Perhaps leveraging the “cool problems” that NASA has at hand.

So quite often it’s about finding those people who want to work on neat problems of interest to NASA and who have expertise in some of these computing-oriented types of fields. And so for our group on the tool development side, it’s definitely been mostly about the people and partnerships to bring in the resources and the workforce skills that we need to give Ashley the tools that she needs.

Korzun: Yeah, and I would have to agree. It’s absolutely the people. The benefits of having the ability on the application side. So, say I’m the end user trying to simulate retropropulsion, or I’m working with a team that is developing the entry heating environment so that we make sure we have the right thermal protection system on this vehicle when it comes time to fly. The ability for people like us to sit side by side with the developers and then bring in this really broad disciplinary base of experts at that grassroots, day-to-day working technical level has truly been invaluable. And that’s where we see these giant steps forward on the overall fidelity of what we’re simulating as well as our confidence in the performance that we’re predicting as we design these systems.

Munk: Yeah. And this is going to be so key going forward just to keep going in these types of efforts, because we’re not going to necessarily have all the test facilities that we need. And as Ashley mentioned, we can’t test these systems end-to-end. So we’re going to very much rely on computational capabilities. And it’s going to take many, many teams of folks and many partnerships to really tackle the vastness of this computational problem that we have.

We haven’t even scratched the surface, really, of all the challenges. We talked about entry and retropropulsion and GN&C. We have other challenging problems like touching down on the surface, and how do the engines react with that surface? And how do we affect the environment around us? So, many challenges to come and we’ve taken this small but very significant step in that direction. I’m really excited to see what we can get done in the near future.

Host: And we’re seeing a lot of emphasis on digital transformation and model-based systems engineering across the agency. What are some of the lessons you’ve learned so far in this effort that could be useful to NASA program and project managers and our technical workforce?

Munk: I think Eric has been very involved with that. There was an incubator project at NASA Langley. I think that was very successful. And we look forward to using the digital transformation initiative to advance our specific work.

I think entry, descent and landing is going to be a valuable test case. And it’ll be a win-win for digital transformation to show its applicability to a really significant technical problem. And we will provide that significant technical problem for them. And digital transformation will help us meet our objectives.

Nielsen: What Michelle said there, and she specifically brought up the incubator — and so from the computational tool development side, that was an activity that we’ve had at Langley. It just kind of wrapped up. It was a three-year activity by design, but it was meant to provide especially our younger workforce — but a core group of people — it was meant to provide them with the white space and the top cover to get out into the computational community where we don’t normally get to spend a lot of time because we typically are attending domain-specific conferences and things like that so AIAA meetings and those kinds of things are absolutely necessary. We need to keep a foot in those camps, but all too often we do not have the opportunity to get out and just learn in a new discipline, in this case, computational science.

Giving folks the flexibility and the time to get to those forums and learn about the state of the art in those types of things. Because I think as we’ve already said that going forward, we are going to be so reliant on computations and modeling and simulation going forward that our workforce has to have the opportunity to get the exposure and the training in those areas just in the same way that they do with fluids or structures or chemistry or any of these other areas. And so, I’d like to see us be able to get our folks to those types of forums more often and give them the opportunity to be exposed to computational science and what’s out there.

Korzun: And I think from more of the boots-on-the-ground, technical workforce perspective, it has been very, very valuable not just for me individually, but for our entire team for us to have opportunities to share our work, but also to do the work in that white space. So yes, we are supporting the larger projects in a very applied sense, but we’re being given the flexibility to pursue some of these what you might consider high-risk, high-reward types of research and development activities. And that’s what birthed this computational capability that is going to continue to be so enabling for us as we continue marching along that Mars development timeline.

But we have excellent support and I certainly hope that this would continue to be the case. There’s a genuine interest from our program and project managers and NASA leadership in keeping up with what we’re doing in this area. They like to see what the technical workforce is doing at a technical level. So kind of continuing to allow those types of presentations and those interactions to occur I think gives us the best chance to continue this success as we move forward.

Nielsen: I think another thing that Ashley hit on a minute or two ago was just learning how to work in a distributed manner. Obviously we’ve been forced into that space with COVID most recently, but in our work over the last few years I think it’s always interesting to note that some of us are in Virginia at Langley. Some folks are at Ames. Ashley resides in North Dakota. Our computing for a lot of this recent work was done in Oak Ridge, Tennessee. And we meet routinely for post-run visualization support with a group in Berlin in Germany.

So, I think that really indicates that we need to learn how to work in a distributed environment and that has to accommodate these extremely large computations. Okay? We’re not just sending spreadsheets back and forth, but we’re sending data the size of the Library of Congress around multiple times a day. And so that in itself is a major challenge. And so just kind of transforming the workplace into a kind of global situation is a challenge. And I think we’re making headway there, but I think we can continue to strive to do better there.

Host: During our conversation today, we’ve talked a lot about entry, descent and landing in the context of Mars missions, but the capabilities we’re discussing obviously have broad application. What are some of the other areas where you are expecting or already observing significant impact?

Nielsen: So, the tools that we provide to Ashley’s group are provided to any number of groups not only within the agency, but outside of the agency to other government organizations, as well as just the U.S. taxpayer. So many of the large aerospace companies around the country use a lot of these tools. And so we really have the situation where we’re impacting many, many applications actually across many mission directorates and many speed regimes.

So, we’re talking about Mars today, but we’re working on many things. Things like high lift for commercial transports for example. Supersonic business jets for example. Any number of things. There’s groups using the codes and the tools for automotive applications for example. Reducing fuel burn on trucks for example. We’ve partnered with the Department of Energy in the past on that front. If nothing else, the work over the last few years has provided “an existence proof” for the ability to do these types of simulations on these newer architectures.

And that’s been I think very eye-opening for the aerospace CFD community. I think it’s had a big impact on other tool development groups not only around the U.S., but around the world that we’ve had interactions with because of this work over the last few years. It’s opened up many doors. We’ve got interesting work with DOD going on right now. Obviously, hypersonics is of interest to DOD. And so we’re doing some very interesting studies with them right now on advanced architectures.

And again, we’re seeing the industry base start to adopt some of these tools. So we had a company reach out to us recently. One of the major aerospace companies was in kind of a bakeoff situation with a new, very large acquisition program for the DOD. And they recently survived a major down-select in being able to move forward on that vehicle development program. And they’re now eligible for a follow-on contract of about a billion dollars with the Department of Defense, and the lead selecting official for the DOD eventually came back and said publicly that they moved forward because of their ability to do much faster engineering.

And we received a very nice letter from the company afterwards saying they were able to do that because they had invested in this type of hardware technology and brought in some of our tools and that enabled them to do much faster engineering than some of the competitors. And so it’s really making its way into many, many niches and spaces across the aerospace industry at this point.

Munk: Gosh, Eric, that sounds like we need to use them as an endorsement for our efforts.

Nielsen: It’s been very exciting, no doubt. We’re very excited.

Munk: I guess I’d add that within the agency, beyond entry, descent and landing, we do a lot of work on materials and digital twins, and cryogenic propellant management is starting to get a lot of attention for the Gateway and lunar missions, and that will continue on to Mars. And so those are two other areas where I’m seeing at least a need, and maybe we can provide that existence proof for them as well.

Host: In a technical arena that’s constantly moving and evolving, how do you keep up and get ready for what’s next?

Munk: Well, I guess from the programmatic standpoint, I’d say I think that’s one of the things that we’re really good at in the entry, descent and landing community is anticipating what’s coming and really advocating for and getting support for putting capabilities in place that are going to enable us to meet our mission goals. So I think this is a really good example of where we’re making a concerted effort.

Ashley is joining my System Capability Leadership Team for a few months to help formulate the next effort in this area so that we can broaden our efforts and take this example, this wonderful example case, and apply it to a greater number of tools in a greater number of disciplines over the next five to 10 years. So I’m really excited about the opportunity to do that.

Nielsen: I think computationally, we have to be constantly cognizant of the need to stay plugged into the broader community. It’s very easy for us to get lost in the day-to-day kind of milestones and internal 911 calls and so forth within the agency. But like we said a few minutes ago for computational technology, it is important to remember that NASA is not the center of gravity for supercomputing. And it’s very important that we are very proactive and stay plugged in to the broader community not only across the U.S., but internationally, and that we constantly look out for new partners and find folks who are willing and able to help us meet our mission goals internally. If there’s one thing I’ve learned, it’s that it’s not going to come and just land in your lap. You really have to go out and find these folks and these resources and kind of make it happen, take matters into your own hands. So that’s something I constantly have to remind myself of: to stay on top of that type of thing.

Korzun: And we’ve talked about people. The theme of the people from across every level from your entry-level, discipline experts and people growing in our field all the way up through policy-making. It really comes down to the people. NASA is very good about engaging with students kind of all the way across their educational journey, so that we’re working directly with them. We’re building partnerships with universities and in a variety of areas.

We’re talking about systems and applications and missions and exploration goals, they’re just so huge and a little bit difficult to wrap your mind around sometimes. We’re not going to get there by ourselves. It’s going to take generations of workforce to get us there. So we’ve got to keep that pipeline full and be bringing in new folks, new institutions, new expertise, new ideas, most importantly, kind of at every step along the way.

Nielsen: That’s an excellent point, Ashley. Establishing pipelines years in advance for the next generation of folks, we’ve got to continue doing outreach. That’s absolutely critical. I completely agree.

Munk: Yes, definitely. And I think that’s one of the challenges I’m feeling from the COVID environment is not being able to go out in person and do outreach, although we do quite a bit virtually and it works OK. But in this area where you really want to reach a broad number of people, you want to make new connections across different organizations and create new partnerships, I think the face-to-face is very valuable and it’s something that we’re going to have to learn to work around at least for the near-term.

Host: This has been incredibly interesting. Thank you so much, Michelle, Ashley and Eric for joining us on the podcast.

Munk: Thank you, Deana.

Nielsen: Thank you, Deana.

Korzun: Yep, absolutely.

Host: Any closing thoughts?

Munk: I guess I would just like to say that I’m very excited about the future of this area. This is the future: high-performance computing, supercomputing. Really transforming our digital tools and applying them to greater problems is really, I see, the challenge of the next decade. And I’m greatly appreciative of Eric and Ashley and their team and what they’ve done to really give us the vision of what we need to be achieving so that we can start moving more succinctly and in a more focused manner down that road.

Nielsen: I’d like to acknowledge folks like Michelle, folks in leadership positions who recognize the importance of basic long-term research. I think that’s critical to maintain our standing in the nation and around the world. And we couldn’t be where we are today without the strong advocacy and champions that we have such as Michelle. And I’ll call out Mujeeb Malik, our ST for aerodynamics at Langley. Folks like this enable Ashley and me to go out and do neat things.

Korzun: Yeah, absolutely.

Munk: Yeah. We’ll keep spreading the good word.

Korzun: I mean, I’d just say, I guess I’m still considered early career to a certain extent, but the journey has been incredibly exciting, as is seeing what’s on the horizon. Things are moving very rapidly in space exploration. As we’ve mentioned, it’s going to take people from all backgrounds, all disciplines, all levels of expertise to bring the ideas, to bring the innovation that we’re going to need to make all of these objectives realizable. It’s just an incredibly exciting time, and I think we’re all looking forward to seeing what the next 10 years hold.

Host: You’ll find links to topics discussed on the show along with Ashley, Eric and Michelle’s bios and a transcript of today’s episode at APPEL.NASA.gov/podcast.

If you haven’t already, please take a moment and subscribe to the podcast, and share it with your friends and colleagues.

As always, thanks for listening to Small Steps, Giant Leaps.