By Jerry Mulenburg

People make decisions, and people are fallible. So how can we make the best decisions in a particular situation given the information available? Crew resource management techniques designed for aircraft emergencies can help.

Some think of decision making as a logical, well-defined process and teach it that way in management courses and elsewhere. Practical experience shows, however, that decision making is seldom a precise, rational activity. In reality, it is often plagued with bias, misconception, and poor judgment. Decisions are often poor choices made for expediency or out of ignorance of alternatives. In my own experience, the person at the top of the hierarchy in most organizations is expected, or assumed, to be in the best position to make the important decisions. Having served in several different NASA Ames Research Center divisions, and as the head of two of them, I experienced firsthand the fallacy of this expectation.

As managers, we get in our own way sometimes because we don’t think enough and sometimes because we think too much. Thinking hard about the decision is not enough; we need to think about it in the right way. Rather than make us better decision makers, a lot of experience in an area can sometimes blind us to alternatives because of our investment in what we’ve done before. In his book, How We Decide, Jonah Lehrer describes the need to embrace uncertainty and new ideas in decision making: “There is no secret recipe for decision making … only … commitment to avoiding those errors that can be avoided. There’s not always time to engage in a lengthy cognitive debate. But whenever possible, it’s essential to extend the decision-making process [to] entertain competing hypotheses [and] continually remind yourself of what you don’t know.”1 Lehrer also warns that we are often most ignorant of what is closest to us. These ideas have special relevance to high-risk and other complex decision-making situations.

For instance, what if you were piloting an aircraft full of passengers and suddenly ran out of fuel? How would you decide what to do first, what to do next, and what to do if none of those efforts solved the problem? Fortunately, this type of situation is so out of the ordinary that few people have experienced it, but such emergencies can and do happen. Thanks to a program called crew resource management, or CRM, decision makers can learn how to handle them.

Crew Resource Management

The best example of CRM in use may be the safe landing on January 15, 2009, of US Airways Flight 1549 by Captain Chesley (Sully) Sullenberger in New York’s Hudson River. A collision with a flock of geese on takeoff from New York’s LaGuardia Airport completely shut down both engines on his Airbus A320. With more than 19,000 hours of flight time, including flying gliders, Captain Sullenberger had the experience and training to prepare him for the once-in-a-lifetime decision he faced. In less than three minutes, this is what Captain Sullenberger did:

- Requested permission to return to LaGuardia—approved (normal procedure)

- Performed engine restart procedure—engines didn’t restart (multiple attempts would have resulted in the same outcome and time was short)

- Asked for alternate airport-landing location in New Jersey—granted (wanted to avoid densely populated area near LaGuardia)

- Made final decision to land in the Hudson River—successful in saving all onboard (copilot provided airspeed and altitude readings for pilot to glide aircraft in)

As Sully put it: “I was sure I could do it. I think, in many ways, as it turned out, my entire life up to that moment had been a preparation to handle that particular moment.”2 During a talk about his experiences, Captain Sullenberger mentioned that he owed a lot to the CRM training he went through.

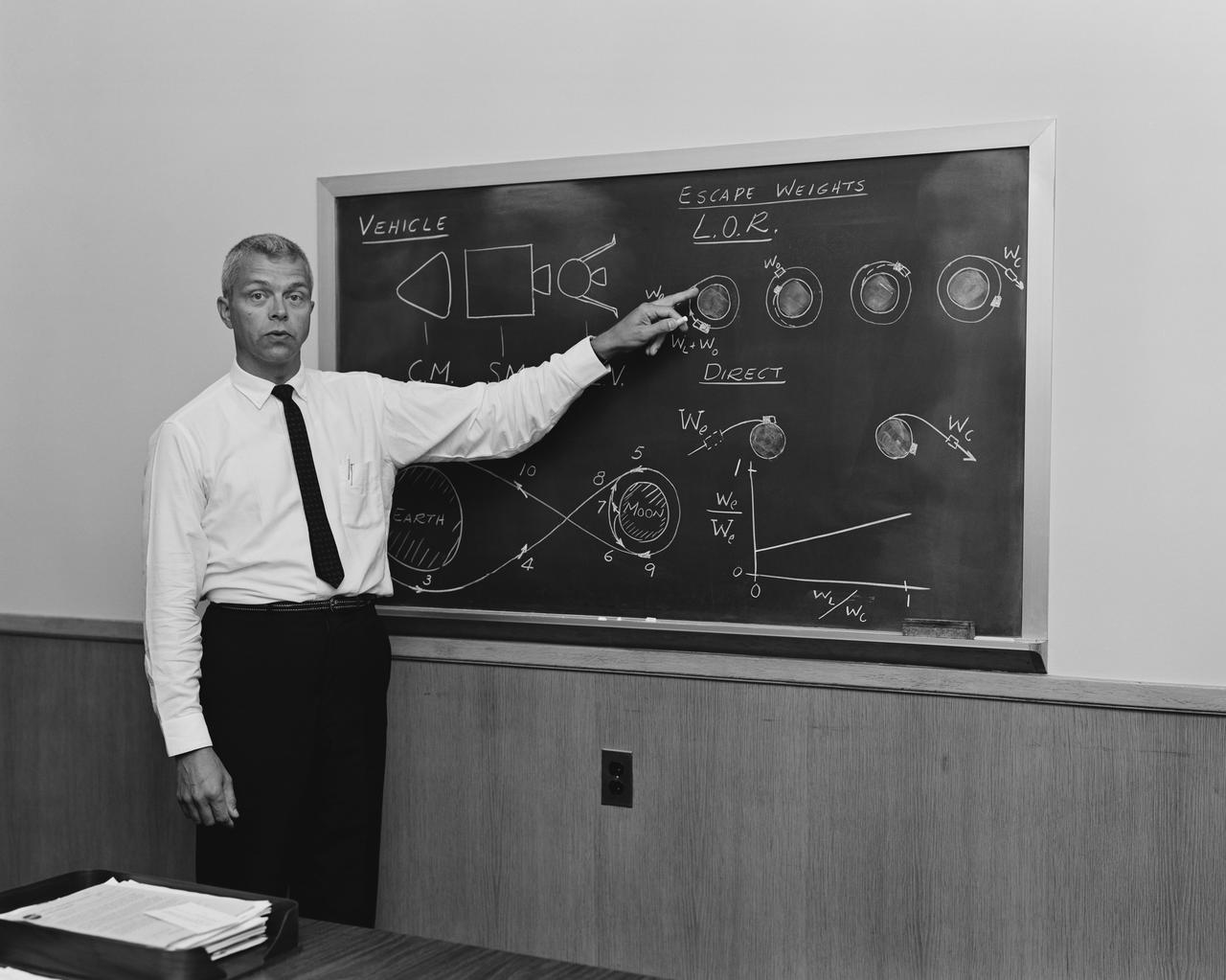

The CRM training Sully refers to is a program Ames developed in the 1970s in response to a number of flawed inflight decisions that resulted in aircraft crashes. CRM is now widely used in military and civilian aviation throughout the world. So what is CRM, and how can its lessons about decision making apply to complex project situations?

The driving idea behind CRM is to train aircrews in communication skills to maximize coordination and minimize the chance for errors. According to AirlineSafety.com, CRM is “… one of the most valuable safety tools we have today. It has contributed significantly toward the prevention of ‘pilot error’ accidents; it has saved airplanes and lives.”3 The basic tenets of CRM are to avoid, trap, or mitigate the consequences of errors resulting from poor decisions. To do this, according to CRM, it is essential to

- recognize that a problem exists,

- define what the problem is,

- identify probable solutions, and

- take appropriate action to implement a solution.

CRM techniques have been applied to other high-risk situations, including the high-stress environment of the operating room. Poor decisions are not simply due to surgeon error but also to the processes and systems that allow errors to remain undetected. CRM in the operating room has shown positive results due to improved communication, teamwork, error reduction, and better training of the whole team, reducing avoidable mortality rates.

One CRM trainer describes the CRM process as “see it, say it, fix it.” This is a good approach to problems on complex projects, including NASA projects.

Applying CRM at NASA

Flawed decision making was one of the root causes of the loss of the Challenger and Columbia Space Shuttles and their crews. It also contributed to the loss of the Mars Climate Orbiter and Mars Polar Lander, and to problems on other projects. Despite all the seasoned veterans working on a project (and sometimes because they trust their expertise too much), mistakes are made, omissions occur, and clear thinking is often displaced by a false sense of knowing what is going on. For most complex projects, the decisions are not as monumental as for in-flight emergencies or surgery. But the mistakes matter and sometimes lead to the expensive and disappointing loss of mission or, tragically, loss of crew.

NASA would benefit greatly from applying CRM-like techniques to its complex projects. One of the things CRM does is integrate the knowledge and experience of all team members to arrive at a wise and robust decision. The acronym TRIM, which stands for team resource integration management, clearly describes the process. TRIM embraces the three CRM tenets of avoid, trap, or mitigate the consequences of decision-making errors, and helps ensure that the four CRM steps—recognizing that a problem exists, defining the problem, identifying probable solutions, and taking appropriate action—will be carried out effectively. “Integration” describes the need to integrate the whole team’s knowledge and skill into a final solution. TRIM also stands for terms that emphasize communication:

- Talk with each other

- Respect each other

- Initiate action

- Monitor results

CRM is already a mature discipline with clear benefits in high-risk situations that can translate to complex projects. With its successful training methods, CRM seems ideal for application to project management decision making. I believe it is important that the project management community recognize the benefits of applying the CRM/TRIM technique to reduce decision errors and the consequences of those errors. CRM is a systematic way of helping us use our collective cognitive skills to gain and maintain situational awareness, and develop our interpersonal and behavioral skills to establish relationships and communicate with everyone involved, so that we achieve accurate and robust decisions. ASK readers responsible for managing complex projects can take the initiative to begin implementing the CRM/TRIM processes and provide feedback on their results.

About the Author

Jerry Mulenburg retired from NASA in 2006 with more than twenty-five years of distinguished service. He held positions as assistant division chief for Life Sciences, and division chief of the Ames Aeronautics and Space Flight Hardware Development Division and of Wind Tunnel Operations. A former air force officer, he is a Project Management Institute Project Management Professional and a trained MBTI administrator, and he currently teaches project management at the university graduate and undergraduate levels. He also provides training in project management as an independent consultant.

More Articles by Jerry Mulenburg

- The NASA Fabrication Alliance: Cooperation, Not Competition (ASK 22)

- A Fly on the Wall (ASK 15)

1. Jonah Lehrer, How We Decide (New York: Houghton Mifflin, 2009).

2. Alan Levin, “Pilot: I was sure I could land in river,” USA Today, February 9, 2009.

3. Robert J. Boser, “CRM: The Missing Link,” November 1997, www.airlinesafety.com/editorials/editorial3.htm.