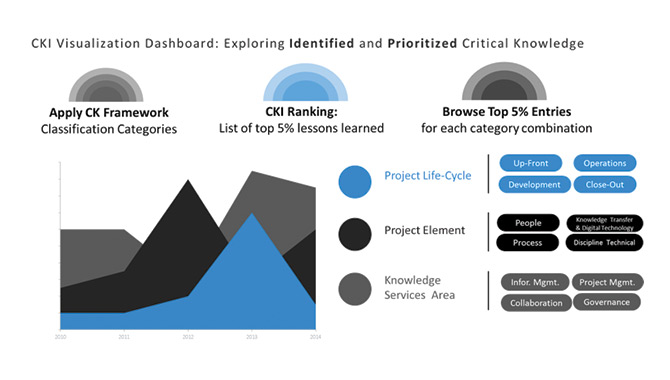

Figure 1: CKI Dashboard

Image Credit: NASA

It began with a project goal of recommending a method for identifying, capturing, prioritizing, and transferring critical knowledge.

This challenge of applying a continuous and formal effort to an abstract and largely conceptual knowledge asset was presented to a team of three graduate students of the Information and Knowledge Strategy program at the Columbia University School of Professional Studies.

The team assigned to the task—headed by Dr. Michael Bell, Chief Knowledge Officer of the Kennedy Space Center—included professionals spanning several industries. Rohit Bhatia is an Integrated Systems Team Leader for the Research and Development division at Corning Inc., Ronald Realubit is a Research Officer specializing in High-Throughput Screening for drug discovery research at the Columbia Genome Center, and Erika Vargas is a Knowledge Management Strategist at the Social, Urban, Rural and Resilience Global Practice at the World Bank in Washington D.C.

There were three overall objectives for the project:

- Ensure that NASA knowledge, which is at risk of being lost, could be discovered and evaluated

- Support the NASA workforce in successfully carrying out NASA’s missions

- Expand the reach and access of the agency’s intellectual capital across NASA’s enterprises, communities, and generations.

The ongoing NASA critical knowledge initiative, as outlined in the Critical Knowledge compendium and in critical knowledge presentations by the NASA Chief Knowledge Officer, Dr. Ed Hoffman, defines critical knowledge as “…broadly applicable knowledge that enables mission success, stimulates critical thinking and helps raise questions that need to be addressed at various phases in a project life-cycle.” Furthermore, critical knowledge represents the top 5% of updatable knowledge that is most important for programmatic and engineering missions to learn and implement. Finally, it includes knowledge that keeps evolving toward new applications and missions, lending itself to a formal process for incorporation into appropriate policies and technical standards.

Now with an agency-wide definition of Critical Knowledge in place, the next step for the team was to develop a continuous and formal method for elevating knowledge generated at NASA to critical knowledge. The development of the Critical Knowledge Index (CKI) began as an exercise in scoring.

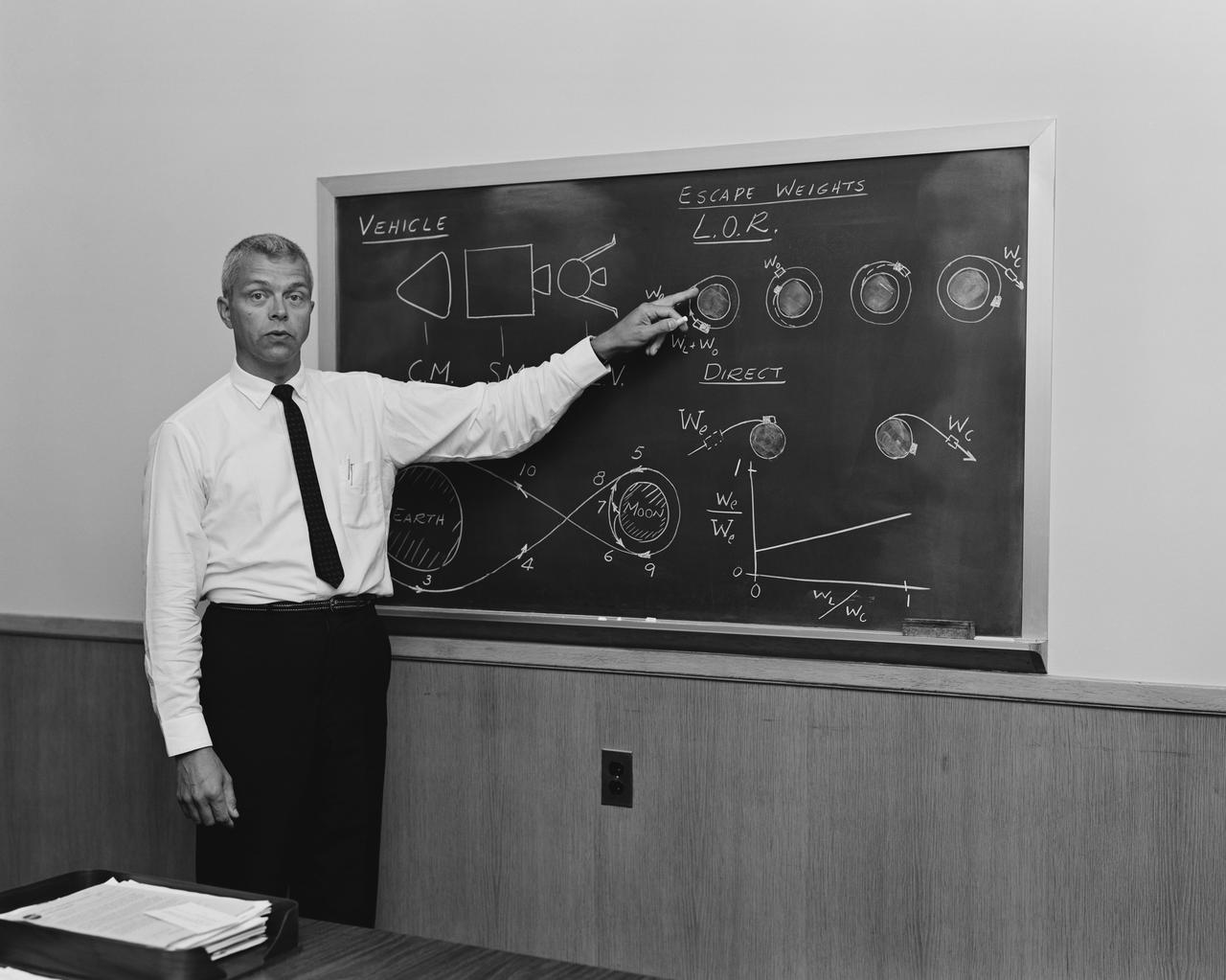

Figure 2: Scoring. Image Credit: NASA

The CKI uses a scoring system based on specific critical knowledge criteria, and the scores allow for calculation of a quantifiable index that, in turn, enables ranking or prioritization. Before calculating the CKI, however, the knowledge would first have to be classified under three categories: Project Life-Cycle, Project Element, and Knowledge Services Area. Following the structure of the Critical Knowledge Gateway initiative, Project Life-Cycle has four options: Up-Front, Operations, Development, and Close-Out. Project Element has four options: People, Process, Discipline Technical, and Knowledge Transfer/Digital Technology. Finally, Knowledge Services Area has four options: Information Management, Project Management, Collaboration/Network, and Governance (see Figure 1).

The team chose lessons learned from the Kennedy Space Center as the unit of analysis to serve as examples of NASA knowledge for defining the set of CKI criteria and for developing the CKI formula. Under the direction and qualitative evaluation of Bell, the following criteria were developed for identifying knowledge as critical to all of NASA:

Risk (R): Situation, process, or behavior involving some exposure to danger (e.g., High Risk vs. Low Risk)

Broadly Applicable (BA): Extent to which knowledge can be deployed in diverse contexts across the agency (e.g., marginally applicable vs. broadly applicable)

Impact (I): Knowledge that has a substantial effect on enhancing project outcomes (e.g., Low Impact vs. High Impact)

Benefit (B): Comparative organizational advantage gained from acquiring knowledge (e.g., Marginal Benefit vs. Substantial Benefit)

Innovation (In): Viewed as the application of better solutions emanating from new knowledge that meets evolving requirements (e.g., Blind Spot vs. New Insight)

The scoring system would be on a scale from 0 to 5: “0” for Not at All, “1” for Minimally Relevant/Low, “2” for Somewhat Relevant, “3” for Average/Middle, “4” for Relevant/Applicable, and “5” for Definitely Relevant/Highly Applicable (see Figure 2).

Figure 3: Scoring. Image Credit: NASA

The following formula was used for calculating the CKI, stressing Risk and Broadly Applicable:

CKI = (1/4 • R) + (1/4 • BA) + (1/6 • I) + (1/6 • B) + (1/6 • In)

(See Figure 3)
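The formula can be sketched in a few lines of Python. This is an illustrative sketch only, not the team's implementation: the function name `cki`, the `WEIGHTS` table, and the sample scores are assumptions made for the example. The weights and the 0 to 5 scoring range come from the text above.

```python
from fractions import Fraction

# Weights taken from the CKI formula; Risk and Broadly Applicable are
# stressed at 1/4 each, and the five weights sum to 1.
WEIGHTS = {
    "R": Fraction(1, 4),    # Risk
    "BA": Fraction(1, 4),   # Broadly Applicable
    "I": Fraction(1, 6),    # Impact
    "B": Fraction(1, 6),    # Benefit
    "In": Fraction(1, 6),   # Innovation
}


def cki(scores):
    """Return the CKI for a dict of criterion scores, each from 0 to 5.

    Hypothetical helper written for this example.
    """
    for name in WEIGHTS:
        if not 0 <= scores[name] <= 5:
            raise ValueError(f"score for {name} must be between 0 and 5")
    return float(sum(WEIGHTS[name] * scores[name] for name in WEIGHTS))


# Made-up scores for a single lesson learned.
example = {"R": 5, "BA": 4, "I": 3, "B": 3, "In": 2}
print(round(cki(example), 2))  # 3.58
```

Because the weights sum to 1, the CKI stays on the same 0 to 5 scale as the individual scores.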

Lastly, the team envisioned a CKI dashboard for browsing through the knowledge and its calculated CKI. The user could filter by the categories of interest, and the dashboard would display the top 5% of entries (ranked by CKI value) among those matching the chosen filters.
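The filtering behavior described for the dashboard might look something like the sketch below. The article does not describe how the dashboard was built, so everything here is hypothetical: the `KnowledgeItem` class, its field names, the `top_by_cki` function, and the sample data. Rounding the 5% cutoff up, so that a small filtered set still shows at least one entry, is also an assumption.

```python
from dataclasses import dataclass


@dataclass
class KnowledgeItem:
    # Field names are hypothetical; the three categories follow the article.
    title: str
    life_cycle: str      # e.g. "Operations"
    element: str         # e.g. "People"
    service_area: str    # e.g. "Governance"
    cki: float           # precomputed Critical Knowledge Index


def top_by_cki(items, percent=5, **filters):
    """Return the top `percent` of items matching every filter, highest CKI first."""
    matches = [
        item for item in items
        if all(getattr(item, field) == value for field, value in filters.items())
    ]
    matches.sort(key=lambda item: item.cki, reverse=True)
    # Assumption: round up, so any non-empty match set shows at least one item.
    keep = (len(matches) * percent + 99) // 100
    return matches[:keep]


# Made-up sample data, for illustration only.
items = [
    KnowledgeItem(f"Lesson {n}", "Operations" if n % 2 else "Development",
                  "Process", "Project Management", cki=n / 10)
    for n in range(1, 41)
]
for item in top_by_cki(items, life_cycle="Operations"):
    print(item.title, item.cki)
```

Applying the filters before taking the top 5% means the cutoff is relative to the filtered set rather than to the whole collection, which matches the description of showing the top 5% "for the filters chosen".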

On December 3, 2015, at the Ames Research Center in Mountain View, California, the team presented its findings to the NASA Knowledge Community at an event called Knowledge 2020 (K2020). The most valuable outcome of the presentation was that it ignited a lively discussion among the NASA knowledge community about the overall concept of agency-wide critical knowledge.

The team successfully presented the difficult thought process of evaluating what is critical to the organization by introducing the CKI methodology. The team received thoughtful questions about the process, and the conversation was a testament to increased awareness of agency-wide critical knowledge. The knowledge community thought through the meaning of ‘Broadly Applicable’ knowledge at NASA, and further discussion ensued about its applicability to a complex organization with deep specialties spread across multiple Centers, Mission Directorates, and supporting organizations. There was also a comment that handling risk as a simplified number in a formula is a different approach, especially in an engineering organization, but that it might help focus attention on the knowledge’s overall value to the organization. The real value, in the team’s opinion, was the passionate and generative discussion this concept sparked. This illuminated the need for, and provided a stepping stone to, designing critical thinking into the way projects are carried out at NASA and positioning the management of critical knowledge as a core value of the organization.

The team also shared with the NASA Knowledge Community that the CKI method could be customized by changing the classification categories, the criteria for defining critical knowledge, and the weights assigned to these criteria in the CKI formula. This capacity for customization resonated among the community: some of the K2020 attendees expressed interest in experimenting with and implementing the CKI method in their own departments. A final comment the team received was that the CKI and CKI dashboard are essentially a knowledge codification tool that could prove useful for an active community of practice at NASA.

The CKI remains a visual and applied way of capturing a difficult theoretical concept such as Critical Knowledge. With this method in place and in trial, NASA could differentiate itself as an organization that at least has a system in place to mitigate knowledge challenges such as organizational silence, lack of prioritization, and knowledge loss.

Rohit Bhatia is an Integrated Systems Team Leader for the Research and Development division at Corning Inc.

Ronald Realubit is a Research Officer specializing in High-Throughput Screening for drug discovery research at the Columbia Genome Center.

Erika Vargas is a Knowledge Management Strategist at the Social, Urban, Rural and Resilience Global Practice at the World Bank in Washington D.C.