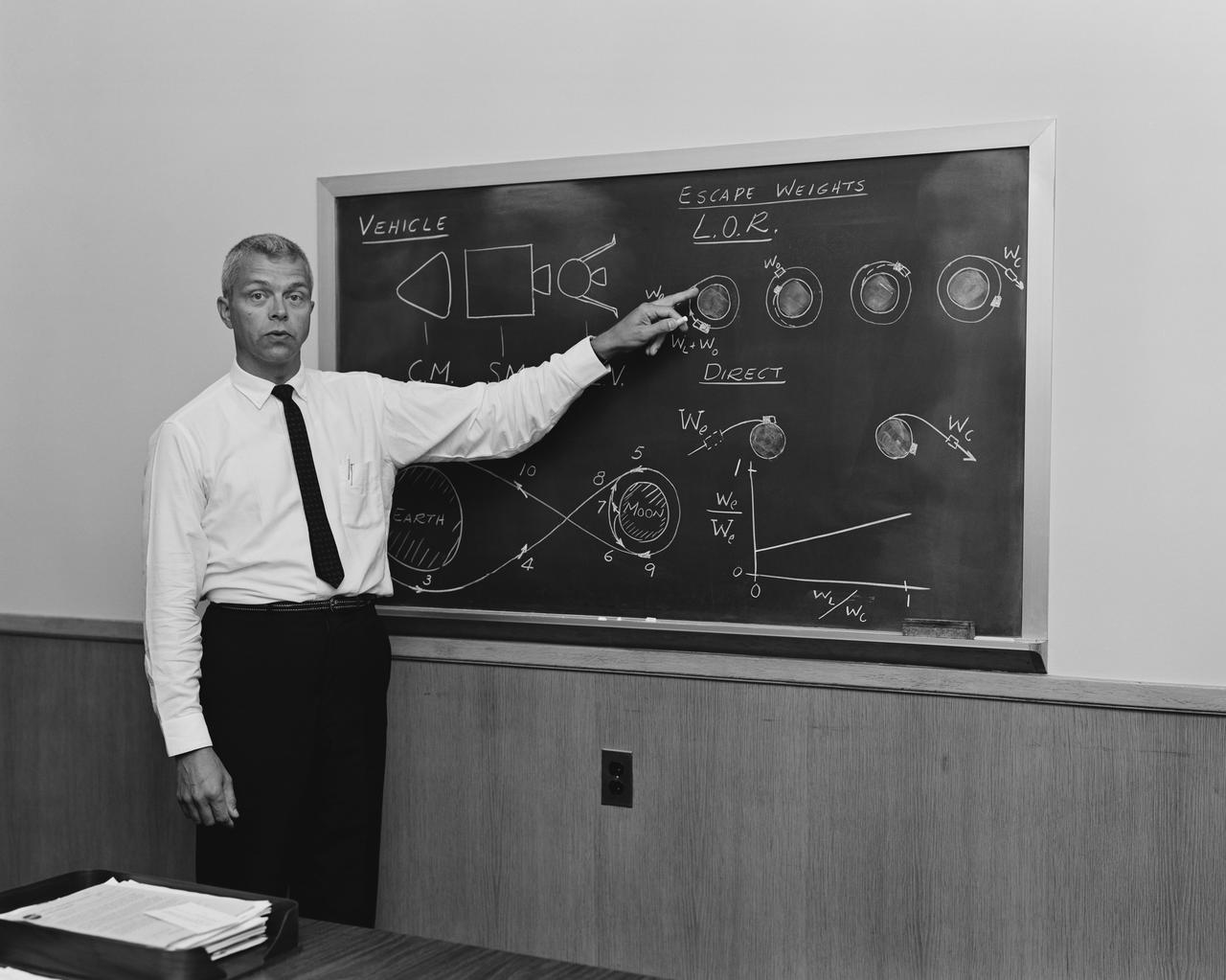

Clockwise from upper left: Ken Johnson, Lead of the Statistical Engineering Team at the NASA Engineering and Safety Center (NESC); Matthew Kohut, Managing Director at KNP Communications; John Holbrook, cognitive scientist at Langley Research Center (LaRC); moderator Ramien Pierre; and Steven Larson, Principal Engineer at the Jet Propulsion Laboratory (JPL). The panel discussed the role of cognitive bias in engineering decision-making and ways to address it.

Credit: NASA

A recent Virtual PM Challenge explored the importance of raising awareness of cognitive bias in engineering decision-making and limiting its impact on NASA projects.

Over the life cycle of a project, NASA teams must make thousands of decisions as they develop, validate, and verify complex aerospace systems. These decisions range from basic design choices, to which tests to conduct, to how to resolve issues surrounding launch, landing, and reentry. But how can engineers be certain that the decisions they make are truly objective? This question was the subject of the NASA Virtual Project Management (PM) Challenge “What’s Really Driving Your Decisions? How Cognitive Bias Impacts Engineering Decision-Making.” During the event, experts discussed cognitive bias, how it can manifest for engineers, and ways to overcome it.

“When we make decisions, we tend to use shortcuts because we make tons of decisions every day,” said Matthew Kohut, Managing Director at KNP Communications and instructor of two APPEL Knowledge Services courses: Presentation Skills for Technical Professionals and Cognitive Bias in Engineering Decision-Making. “Some of those decisions require a lot of deep thinking and some of them we try to do more efficiently and quickly. And that’s often where we see cognitive biases come into play: when we’re using quick-term decision-making.”

Quick-term decision-making is a critical human ability that relies on heuristics: simple, experience-based approaches to learning or problem-solving. A heuristic is often expressed as a “rule of thumb” or an “educated guess.”

“Heuristic thinking is particularly useful in making decisions under uncertainty,” said John Holbrook, a cognitive scientist in Human/Machine Systems at Langley Research Center (LaRC). “This could be when you have incomplete information about the current state or future states. [Or] when the time available for making a decision is shorter than the time required to do the analyses of the available information.”

He added, “Heuristics are, in fact, pretty sophisticated reasoning tools that reliably help people solve everyday problems.”

Issues arise when a given decision necessitates greater attention, insight, or creativity than would be provided by an intuitive response or an educated guess. “I would define cognitive bias as a systematic mismatch between [a] heuristic decision rule and the decision context,” Holbrook said.

Cognitive bias is a built-in part of the human experience. At its worst, it represents habitual patterns of thinking that discourage new or creative thinking by prompting people to ignore the nuances that make each situation unique. However, cognitive biases are not always negative. Human beings have evolved to use them for a reason. According to Ken Johnson, Lead, NASA Statistical Engineering Team with the NASA Engineering and Safety Center (NESC), engineers rely on these predictive patterns of thought to solve problems all the time. “They’re [things] you know so you don’t have to go through relearning every time,” he said.

Steven Larson, Principal Engineer at the Jet Propulsion Laboratory (JPL), agreed. Cognitive biases are “patterns of decision-making. They’re really just patterns of neural firing in our brains,” he explained. “It’s neither good or bad. It’s a feature of how we are as human beings. How we function.”

Although cognitive bias is a natural part of the human condition, certain circumstances—such as when people are under stress or have limited time to fully examine the issues at hand—increase the likelihood that bias will occur.

“Stress…constricts your bandwidth, so to speak. Your cognitive bandwidth,” said Kohut. “There’s a lot of research to suggest that people who are under stress are going to make quicker decisions that are more prone to mistakes than people who have the time to really reflect on things and figure out the pros, the cons.”

Cognitive bias also happens more frequently when a decision must be made without adequate data. “If you lack information…essentially your brain will start making things up. It’ll fill in the gaps; it just does it automatically,” said Larson.

What form that bias will take depends on the person or people involved as well as the situation. Common types of cognitive bias include optimism bias, groupthink, and anchoring bias.

“Optimism bias is simply the preference toward thinking that things are going to work out for the best,” said Kohut. A common example occurs during proposal development: the people involved believe that they can complete a project for a specific cost, even though doing so may be predicated on everything going right—a rare occurrence. A related phenomenon is bias based on past success. If something worked before, people believe it will work again—even if the context is different.

Another aspect of optimism bias is overconfidence. “When we become familiar with something, we tend to believe that we know more about it than we really do,” said Larson. This can lead to making assumptions that are not based on data specific to the given situation.

Groupthink is a social form of bias involving multiple people. Typically, when people work on something together and converge on an answer, the majority tend to move increasingly in the same direction. Those who don’t support the group’s decision may feel ignored or as though they can’t voice a dissenting opinion. This, in turn, can jeopardize the project because when a group only validates aligned views, there is little consideration of whether those views are based on appropriate assumptions or accurate data.

Anchoring bias is another common form of bias, in which people become “anchored” to the first figure they hear or a particular result they expect. When more information is introduced, people may devalue it in favor of focusing on that initial number or expectation.

In an example drawn from his own experience, Larson described a situation in which “the entire team, myself included, became anchored on a particular result.” It began with an assumption the team made about how two pieces of hardware would interact. “It was anchored on an initial analysis, and we proceeded for a long time—all the way through development in the test program—on the basis of this assumption. And so, naturally, because we had discussed the problem with the…team, the test team inherited this bias, this anchoring bias. It was only by dumb luck and the introduction of a test engineer who was completely unfamiliar with the design that we stumbled onto a fatal flaw in the design that up to that point had never been tested because no one could think of testing that—because we’d already ruled it out, we thought, during the design process a long time ago.”

When a team becomes anchored on a particular result, they often—unconsciously—stop thinking critically about the problem. “Once that happens,” said Larson, “everything you build on top of that is flawed.”

Fortunately, tools exist to limit the negative impact of cognitive bias on engineering decision-making. An important first step is to raise awareness of the existence of biases.

“The beginning of this is simply familiarizing yourself with some of the biases,” said Kohut. One approach is to read about both biases and heuristics. “It’s worth thinking about which are affecting your personal decision-making and which are going to come into play in more of a group setting.” The more people know about biases and heuristics as well as about their own proclivities, the better able they’ll be to ask themselves questions that get at the heart of the matter, such as: Am I appropriately using this heuristic because I’m dealing with unknowns or is there bias in my thinking that could be distorting the issue?

As part of this reflection, individuals may find it useful to keep a journal of their decision-making activities and results. “You have to be honest with yourself about how you’re doing because you will make better decisions by tracking how well you made decisions [in the past],” said Kohut.

In a group setting, becoming aware of biases can also be part of the process of mitigating them. Johnson uses this approach at the NESC to devise engineering test plans that leverage individual biases in order to fully explore the issues affecting a particular decision.

“Our engineers are really good at just seeing a problem and solving it. They’re so smart, so creative,” he said. However, “everybody on a team sees that problem differently and will approach it differently.” He suggested acknowledging the different biases by inviting each team member to articulate the problem from the perspective of their area of expertise. As each team member does so, the biases become clear to the group and the team can come to consensus about the best integrated approach.

“You’ve acknowledged the biases. You’ve gotten agreement. You’ve stated the problem to be solved and now you can compare your methods, your responses, your measurements to that problem,” said Johnson. This allows the team to take two critical steps forward in developing engineering test plans: “Defining the problem well enough to solve it and then solving the problem.”

In addition to the work done by Johnson in developing engineering test plans, NASA has incorporated multiple cognitive bias-limiting tools into its development and review processes. These include the Joint Confidence Level (JCL), “Red Teams,” and a formal dissenting opinion process to ensure all voices are heard.

The JCL asks teams to determine the likelihood of achieving a particular goal. “That’s in some way asking people to reexamine the assumptions they made,” said Kohut. “Another way that a lot of NASA teams will do this kind of thing is the ‘Red Team’ idea or ‘Murder Boards’…where you get experts who are outside of a project or a team to look at your ideas with fresh eyes.” He added, “Getting those fresh perspectives is a huge way to help mitigate biases.”

Larson agreed about the value of bringing in people with an independent perspective. “You’re trying to mitigate the fact that the original people who did the development are biased,” he said.

To help project teams counter groupthink, Kohut suggested that they consider doing a “pre-mortem.” As they are approaching a major decision, the group can pause and write a letter to themselves as though it were a year in the future. The letter states that they made the decision in question—and things went terribly wrong as a result. In the letter, the team must dig deep to analyze the possible weaknesses inherent in their proposed decision in order to explain why the project failed.

“That’s a really powerful exercise because it forces you to…reexamine that groupthink. And it also gives the pessimists a voice,” said Kohut, which is especially important if anyone in the group felt as though they couldn’t speak up because of social pressure. Overall, the exercise expands the space for an objective discussion, encouraging the team to reevaluate their choices and assumptions.

Another key to reducing the risk of groupthink is to build teams with diverse individuals. This increases the breadth of experience applied to a given problem, which in turn limits the likelihood that the entire group will have similar biases or heuristic blind spots.

Ultimately, cognitive biases are unavoidable because they are built into the way human brains function. “We think in patterns and there’s no way to escape that. Whether these patterns manifest as biases or actually solve problems for us is sort of a matter of context,” said Larson.

On the plus side, cognitive biases help people solve problems and make decisions without having to relearn things over and over. The key for engineers at NASA and around the world is to leverage the positive aspects of cognitive bias while limiting its negative effects, both by maintaining awareness at the individual level and by instituting mitigating processes at the organizational level.

To learn more about the challenges and solutions surrounding cognitive bias, watch the NASA Virtual PM Challenge “What’s Really Driving Your Decisions? How Cognitive Bias Impacts Engineering Decision-Making,” now available online.
