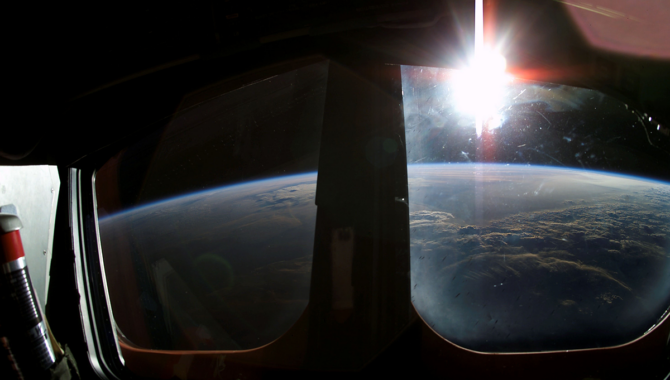

On 22 January 2003, the crew of STS-107 captured this sunrise from the crew cabin during Flight Day 7.

Photo Credit: NASA

Continued vigilance is required to maintain an organizational culture that supports critical knowledge sharing.

This story was originally published in NASA History News & Notes: 20 Years After STS-107.[1]

The lessons NASA learned from the loss of Columbia and its crew were profound. Documentation, insights, and reflections from the accident are available via the NASA History Office[2] and in a comprehensive new website from the Office of Safety and Mission Assurance’s Apollo, Challenger, and Columbia Lessons Learned Program.[3] Although these lessons are often encapsulated in specific, actionable nuggets of information, the lessons that most inform our culture tend to be unwritten. After Columbia, much closer scrutiny was applied to NASA’s organizational culture, the echoes of which continue to resonate and shape the Agency’s ways of working and learning today.

Space shuttle Columbia was lit up at Launch Pad 39A on the night of 5 March 1981 as preparations for its maiden voyage, the STS-1 mission, were being made.

Photo Credit: NASA

While mourning the Columbia tragedy, NASA was prompted to reconsider organizational practices it had previously accepted, a necessary introspection urged by the Columbia Accident Investigation Board (CAIB). Chartered within two hours of loss of signal,[4] the 13-member CAIB worked for almost seven months with more than 120 staff members and 400 NASA engineers, reviewing thousands of documents and other inputs to analyze the factors that led to the accident.[5] The CAIB intentionally expanded its purview beyond the technical cause to assess organizational culture, including historical factors, decision-making processes, and the unintended consequences of organizational and management practices.[6] Many of the Agency's challenges had been acknowledged before the STS-107 Columbia flight but had not been prioritized or resolved, while others were not sufficiently understood.[7]

The CAIB identified four problematic cultural elements that it linked to the Columbia accident: the assumption that past success was evidence that success would continue; organizational issues that inhibited candid communication; institutionally siloed management practices; and informal authority structures that operated outside the formal system.[8] The CAIB compared these with best practices and made recommendations to correct the problems it observed. Many of these recommendations have proven evergreen and remain Agency priorities 20 years later. The establishment of NASA's Technical Authorities,[9] the creation of the Office of the Chief Engineer's NASA Engineering and Safety Center[10] as a source of independent assessment and expert knowledge, and the Office of Safety and Mission Assurance's leadership in addressing organizational silence and reinforcing safety culture are all traceable to the CAIB report.

The CAIB also identified challenges with how NASA learned and applied its lessons,[11] citing a 2001 report by the General Accounting Office (GAO).[12] At that time, NASA's approach to lessons learned concentrated on using information technology systems to collect and store lessons in a semi-structured format. Although new technologies offered new opportunities, the GAO subtitled one section of a later report "Information Technology is Important, but Should Not Be the Only Mechanism for Knowledge Sharing."[13] In 2011, the Aerospace Safety Advisory Panel reinforced the need for a more comprehensive strategy, recommending that the Agency establish a Chief Knowledge Officer to serve as a focal point and champion for knowledge sharing practices.[14] Both of my predecessors as Agency Chief Knowledge Officer noted the importance of taking a federated approach to this responsibility, recognizing that NASA needed to manage local knowledge in its own context while building the capacity to share critical knowledge across the Agency as appropriate.[15] In recent years, NASA has also expanded the lessons learned construct to include processes for team review, recording, dissemination, and application of these lessons.[16]

(Left to right) Bryan O'Connor, Amy Edmondson, Mike Ryschkewitsch, and Robin Dillon share insights on organizational silence during a panel at Goddard Space Flight Center on 31 July 2012.

Photo Credit: NASA/Goddard Space Flight Center

In addition to lessons learned, oral histories, classroom discussions, mentoring, and other knowledge sharing activities are crucial to NASA, because much of what engineers and project teams must learn and apply on the job cannot be captured in a database.[17] As Steven R. Hirshorn, Chief Engineer for Aeronautics at NASA Headquarters, writes, engineering leaders at NASA need to know the "systems engineering process, oversee the process, and be an advocate for its value to the project. The Chief Engineer must also be able to use their experience and judgment to implement it appropriately for their project."[18] Competencies like these are crucial in any field, but the lessons of Columbia remind us that even those with the greatest technical acumen need a supporting culture to apply and share their knowledge effectively.

When tragedies occur, a healthy organization does not normalize the accident or shame the participants; it seeks to understand why the accident occurred and, where possible, to prevent it from happening again. The cultural lessons from Columbia continue to guide how we share knowledge and how we apply our expertise to our missions. As members of an organization committed to learning and improving, we at NASA must combine the facts we know, the lessons we learn as a team, and the common values we practice together. NASA's knowledge and organizational culture therefore require continued vigilance and maintenance. We must do this not only when it is easy, but also when it is hard.

Citation

[1] Thank you to Stephen J. Angelillo, Michael Bell, Steven R. Hirshorn, Kevin Gilligan, and Zachary Pirtle for sharing their insights at different stages of this article’s development, and thank you to the NASA History Division for the kind invitation to participate in this issue.

[2] https://history.nasa.gov/columbia/Introduction.html

[3] https://sma.nasa.gov/sma-disciplines/accllp/columbia#history

[4] NASA, “Columbia: Investigation,” Office of Safety and Mission Assurance, 2023, https://sma.nasa.gov/sma-disciplines/accllp/columbia#investigation.

[5] Columbia Accident Investigation Board (CAIB), "CAIB Report," Volume I, Washington, DC, August 2003, p. 9.

[6] CAIB Report, Volume I, Part 2, p. 97.

[7] One source that identified many organizational challenges similar to those identified by the CAIB was the NASA Integrated Action Team's report, "Enhancing Mission Success – A Framework for the Future," 21 December 2000, https://history.nasa.gov/niat.pdf.

[8] CAIB Report, Volume I, Chapter 7, p. 177.

[9] NASA, “NASA Policy Directive 1000.0C: NASA Governance and Strategic Management Handbook,” effective date 29 January 2020, https://nodis3.gsfc.nasa.gov/NPD_attachments/N_PD_1000_000C_.pdf, section 3.5.1.

[10] https://www.nasa.gov/nesc

[11] CAIB Report, Volume I, Chapter 7, p. 189.

[12] GAO Report, “Survey of NASA Lessons Learned,” GAO-01-1015R, 5 September 2001, https://www.gao.gov/assets/gao-01-1015r.pdf. The topic received a more detailed treatment in the 2002 follow-up: GAO Report, “Better Mechanisms Needed for Sharing Lessons Learned,” GAO-02-195, January 2002, https://www.gao.gov/assets/gao-02-195.pdf.

[14] NASA Aerospace Safety Advisory Panel, "Aerospace Safety Advisory Panel Annual Report for 2011," Washington, DC, 2011, p. 12, https://oiir.hq.nasa.gov/asap/documents/2011_ASAP_Annual_Report.pdf.

[15] Forsgren, Roger, Lean Knowledge Management: How NASA Implemented a Practical KM Program, Business Expert Press, 2021; Hoffman, Ed and Jon Boyle, R.E.A.L. Knowledge at NASA, Project Management Institute, Newtown Square, PA, 2015, https://www.pmi.org/-/media/pmi/documents/public/pdf/white-papers/real-knowledge-nasa.pdf.

[16] https://appel.nasa.gov/lessons-learned/

[17] See especially: Polanyi, Michael, Personal Knowledge: Towards a Post-Critical Philosophy, University of Chicago, 2015 (1958); Collins, Harry, Tacit & Explicit Knowledge, University of Chicago, 2010; Vincenti, Walter G., What Engineers Know and How They Know It, Johns Hopkins, 1990, pp. 198–199; and Petroski, Henry, To Engineer Is Human: The Role of Failure in Successful Designs, New York: Vintage, 1982, pp. 81–83.

[18] Hirshorn, Steven R., Three Sigma Leadership: Or, the Way of the Chief Engineer, Washington, DC: NASA, 2019, p. 51, https://www.nasa.gov/connect/ebooks/three-sigma-leadership_detail.