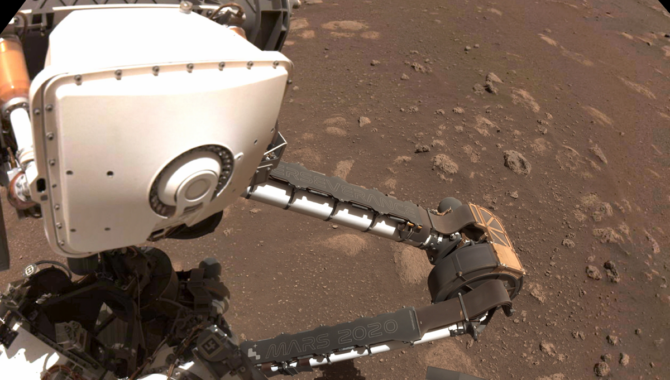

PIXL, the white instrument mounted on the robotic arm of NASA’s Perseverance Rover, uses a form of artificial intelligence known as adaptive sampling to examine rocks on the surface of Mars.

Photo Credit: NASA

Teams are creating AI tools to find new craters on Mars, forecast algae blooms, estimate hurricane intensity, improve weather and climate models, and track the smoke from wildfires.

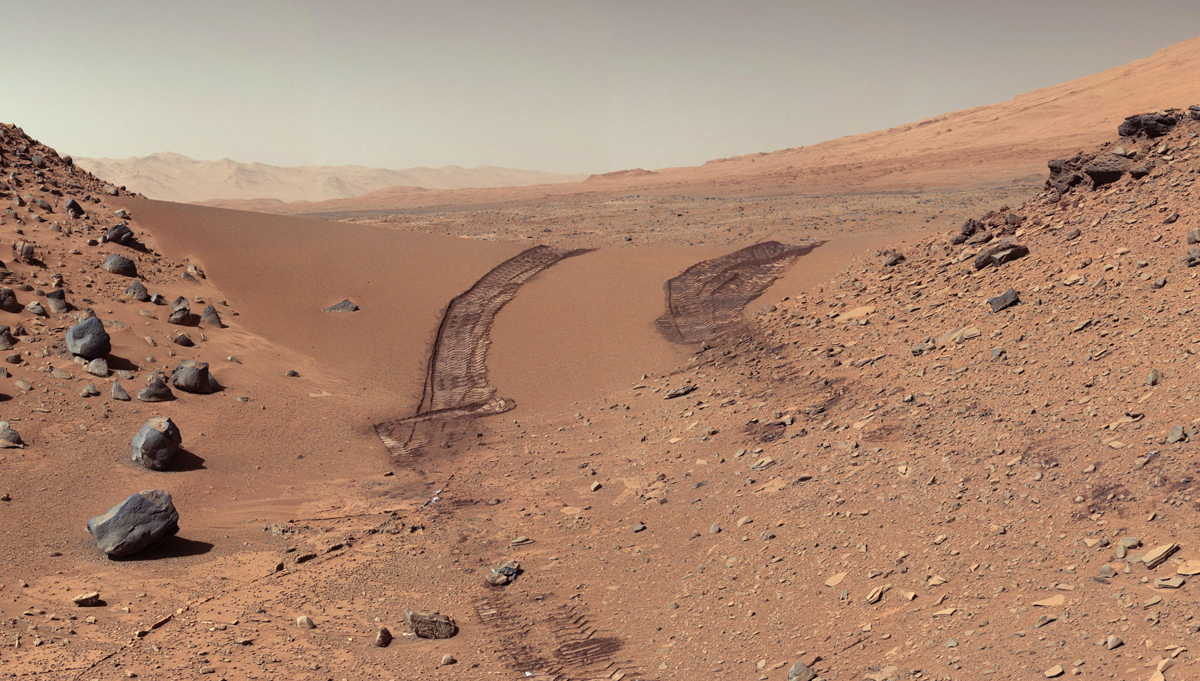

As NASA’s Perseverance Rover explores Mars, scouting Jezero Crater for rocks with mineral traces that could indicate the planet supported microbial life billions of years ago, one of the key tools it uses is PIXL, a lunchbox-size X-ray spectrometer mounted at the end of the rover’s robotic arm. The instrument is so precise it can home in on something as small as a single grain of salt. It’s the first instrument on Mars to use a form of artificial intelligence (AI) known as “adaptive sampling.”

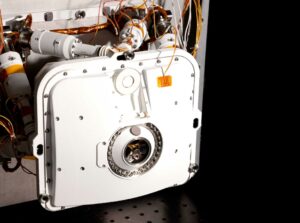

The Planetary Instrument for X-ray Lithochemistry (PIXL) opens its cover during testing at NASA’s Jet Propulsion Laboratory. Photo Credit: NASA/JPL-Caltech

PIXL, an acronym for Planetary Instrument for X-ray Lithochemistry, uses adaptive sampling to move autonomously into position near a rock and scan it for target minerals. When AI determines that the initial scan has found promising results, adaptive sampling guides PIXL to perform a “long dwell,” gathering more information. Without adaptive sampling, the team would have to search for these mineral traces in reams of data, then rescan key rocks for more detail.
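The scan-then-dwell decision described above can be illustrated with a short sketch. This is not PIXL's flight software: the `ScanPoint` type, the `plan_dwells` function, the interest score, the threshold, and the dwell times are all hypothetical values chosen for illustration.

```python
from dataclasses import dataclass


@dataclass
class ScanPoint:
    x_mm: float
    y_mm: float
    signal: float  # hypothetical interest score from the quick initial scan (0 to 1)


def plan_dwells(points, threshold=0.8, short_s=10.0, long_s=60.0):
    """Pair each point with a dwell time: long where the quick scan looks promising.

    The threshold and dwell durations are made-up numbers, not instrument settings.
    """
    return [(p, long_s if p.signal >= threshold else short_s) for p in points]


# Three invented scan points; only the middle one crosses the threshold.
quick_scan = [
    ScanPoint(0.0, 0.0, 0.12),
    ScanPoint(0.1, 0.0, 0.91),
    ScanPoint(0.2, 0.0, 0.47),
]
plan = plan_dwells(quick_scan)
for point, dwell in plan:
    print(f"({point.x_mm}, {point.y_mm}) mm: dwell {dwell} s")
```

The point of the sketch is only that the decision to gather more data is made on the spot, from the first scan, rather than after a team has reviewed the data on Earth.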

“At NASA, unsurprisingly, [as a] science and technology organization, [artificial intelligence] is not just a buzzword,” said NASA Deputy Administrator Pam Melroy, speaking at a town hall meeting to discuss AI earlier this summer. “We have already harnessed the power of AI tools to benefit humanity by safely supporting our missions and our research projects, analyzing data to reveal underlying trends and patterns and developing systems that are capable of supporting spacecraft and aircraft autonomously.”

A series of executive orders over the past five years has guided federal agencies in setting policies and goals for the development and use of AI. Most recently, on October 30, 2023, an executive order called for the “Safe, Secure, and Trustworthy Development and Use of Artificial Intelligence.”

“The rapid speed at which AI capabilities are advancing compels the United States to lead in this moment for the sake of our security, economy, and society,” the order states. “In the end, AI reflects the principles of the people who build it, the people who use it, and the data upon which it is built.”

NASA Public Affairs Specialist Melissa Howell, left, NASA Chief Scientist Kate Calvin, NASA Chief Technologist A.C. Charania, NASA Chief Artificial Intelligence Officer David Salvagnini, and NASA Chief Information Officer Jeff Seaton, right, participate in a NASA employee town hall on how the agency is using and developing Artificial Intelligence (AI) tools to advance missions and research, Wednesday, May 22, 2024, at the NASA Headquarters Mary W. Jackson Building in Washington. Photo Credit: NASA/Bill Ingalls

NASA leaders at the town hall addressed the emergence of generative AI tools capable of using data to create text, images, videos, and more. These tools have become easily accessible to the public as their content reaches quality levels that can be difficult to distinguish from the work of humans. While the tools have great potential, they can produce content that is false or deceptive or triggers copyright concerns.

“The AI is not accountable for the outcome. The person is, the human is, right? And the vendor who may offer an embedded AI capability as part of a product suite is not accountable as well. The user is. The person who leverages that tool is responsible. So, we have to first own that, and we have to understand what responsibilities come with us,” said David Salvagnini, NASA’s Chief Artificial Intelligence Officer.

“So, then how do we be safe about this? We understand our responsibility as the ultimate accountable person as it relates to our work products,” Salvagnini said. “And then if we happen to use AI as part of the generation of a work product, that’s fine, but just understand its capabilities and limitations.”

Even the earliest NASA missions generated vast amounts of data, challenging the resources of the teams charged with finding items of scientific interest, and inviting the metaphor of a needle in a haystack. As technology advanced, the haystacks of data grew larger, and the needles harder to find.

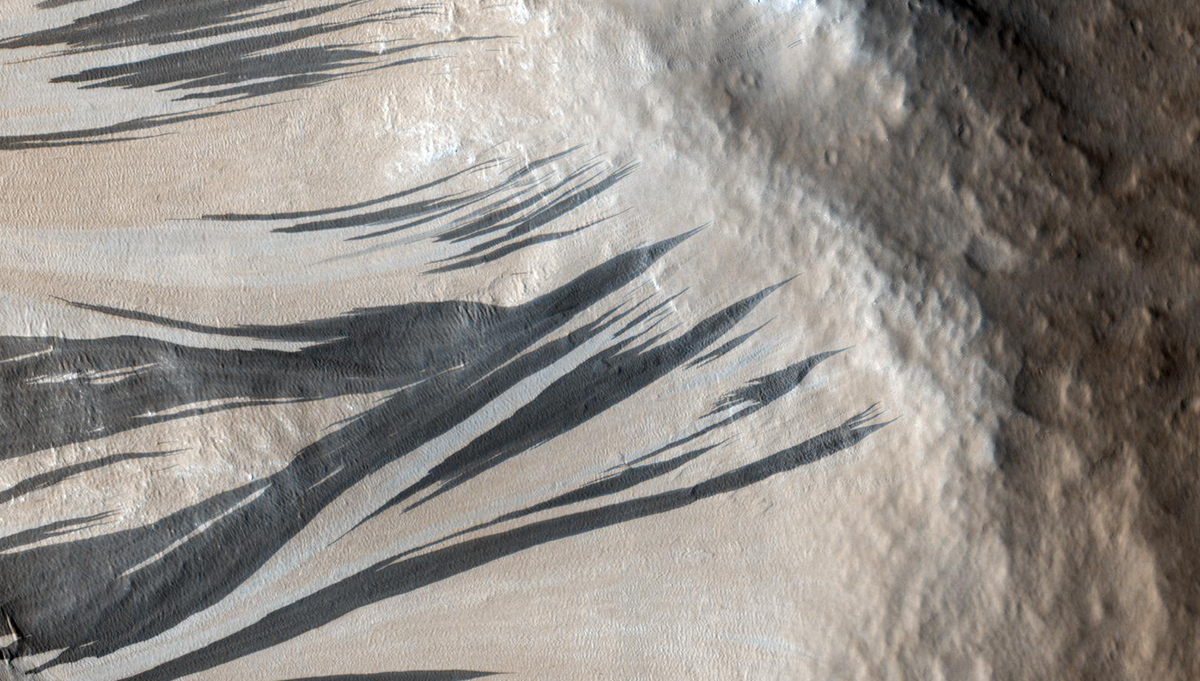

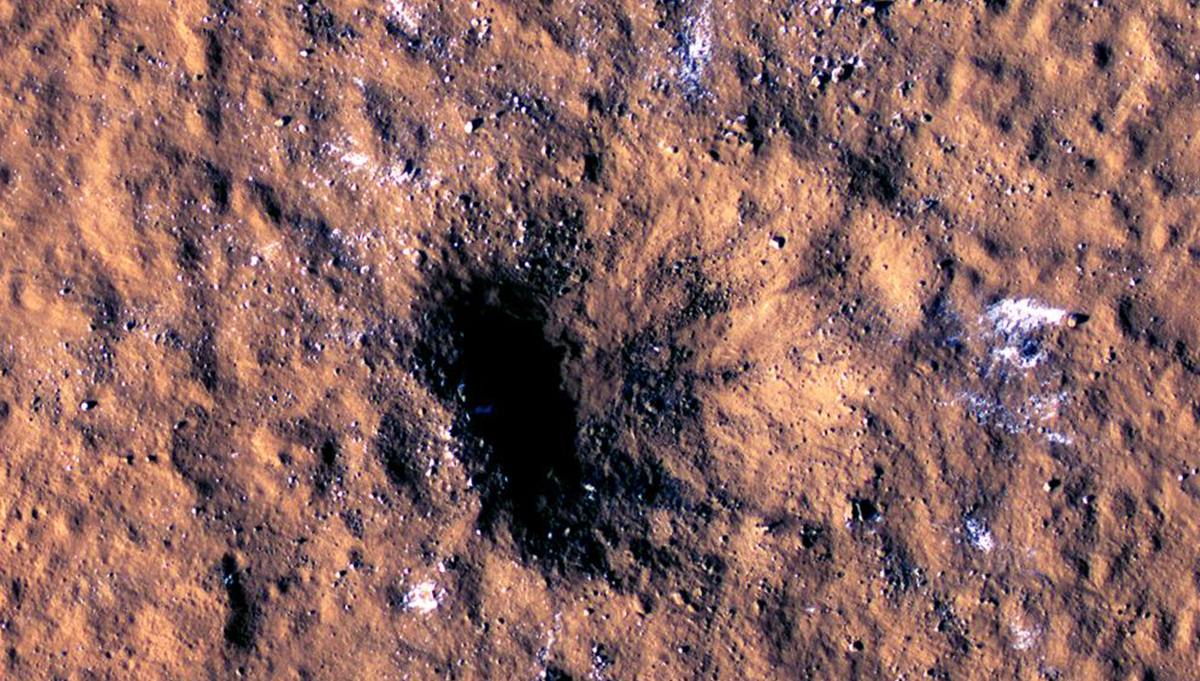

For instance, the Mars Reconnaissance Orbiter (MRO) has been orbiting the Red Planet for more than 18 years. It carries an instrument known as the Context Camera, which takes low-resolution images that each cover hundreds of square miles. A researcher needs about 40 minutes to search one of these images for the telltale signs of an impact. On the rare occasion that they spot one, they pull up the corresponding images from MRO’s High Resolution Imaging Science Experiment (HiRISE) to see the impact zone in detail and determine whether it formed a new crater on the Martian surface.

JPL researchers have developed an AI tool that works through 112,000 low-resolution images using a collection of supercomputers working in concert. The AI tool can scan an image for the signs of a new crater in about 5 seconds. In 2020, scientists used HiRISE to confirm that an area identified by AI was, in fact, a fresh crater about 13 feet in diameter.
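A rough back-of-the-envelope calculation shows what those figures imply. The sketch below assumes the 40-minute and 5-second figures apply uniformly to all 112,000 images and that the images are processed one after another. Both are simplifications, since the article does not say how the work is divided across the supercomputers.

```python
# Figures quoted in the article
IMAGES = 112_000
HUMAN_SECONDS_PER_IMAGE = 40 * 60  # about 40 minutes per image
AI_SECONDS_PER_IMAGE = 5           # about 5 seconds per image

# Assumption: images are handled one at a time, with no parallelism.
human_hours = IMAGES * HUMAN_SECONDS_PER_IMAGE / 3600
ai_hours = IMAGES * AI_SECONDS_PER_IMAGE / 3600
speedup = HUMAN_SECONDS_PER_IMAGE / AI_SECONDS_PER_IMAGE

print(f"Manual review: {human_hours:,.0f} hours ({human_hours / 24 / 365:.1f} years nonstop)")
print(f"AI review:     {ai_hours:,.0f} hours ({ai_hours / 24:.1f} days)")
print(f"Per-image speedup: {speedup:.0f}x")
```

Under these assumptions, a single researcher working around the clock would need roughly eight and a half years to review the full set, while the AI tool would need about six and a half days.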

“So, scientists have been using AI for a long time, and one of the things that AI is really good at is analyzing large data sets, like the data that we get from our Earth-observing satellites, our space telescopes, or our other science missions. One of the ways that we use AI [is] what scientists like to call anomaly detection or change detection,” said Kate Calvin, NASA’s Chief Scientist, speaking at the town hall. “Essentially, when you look through data and you look for something that looks distinct, a specific feature. Once you’ve identified that feature, you can count it, you can track it, you can avoid it, you can seek it depending on what your goal is.”

NASA’s Earth-observing satellites have amassed a vast and growing amount of data, an estimated 100 petabytes. NASA teams have developed AI tools to forecast algae blooms, estimate hurricane intensity, evaluate coral health, count trees on large swaths of land, improve weather and climate models, and track the smoke from wildfires.

“We use this throughout science. In the Science Mission Directorate, we do things like counting exoplanets. It’s the same type of thing that we do with counting trees, but now we’re looking out in the universe and seeing what else is out there,” Calvin said.