By Marty Davis

The seventeen-ton Compton Gamma-Ray Observatory (or CGRO), launched aboard the space shuttle Atlantis on April 5, 1991, was, at the time, the heaviest astrophysical payload ever flown.

It returned data to astronomers for nine years, detecting gamma rays from sources including black holes and our own sun and providing content for literally thousands of scientific papers. In June 2000 it was safely de-orbited and re-entered Earth’s atmosphere.

So the project to build the observatory was a success. But that does not mean it did not have problems, including some serious ones. As Instrument Systems Manager and then Observatory Manager for the project, I got to see both what we did well and what we could and should have done better. Although work on the CGRO began some two decades ago, I believe that our experience offers important lessons to managers of large projects today.

The success of the variety of subsystem and instruments we developed was determined, in large part, by the quality of management. Having the right technical expertise is essential, of course, but large, complex projects like this one stand or fall on how well the work of the many parties involved is coordinated, on sensibly allocating resources of money and time, on identifying and solving small problems before they become big ones — in other words, on project management skills.

A Slow Start

The Gamma-Ray Observatory project got off to a slow start. A study phase began in 1978. Three years later, CGRO was confirmed as a project, but sufficient money to begin the work in earnest was not allocated until two years after that, in 1983. This leisurely pace gave the team the false impression that they had plenty of time to get organized and do the work. The unprecedented size of the project made it difficult to predict how much effort it would entail. Many members of the project team had worked on small, rocket-launched satellites, building instruments in what was almost a hobby shop atmosphere. Even though we knew that designing and building a device so much larger than anything done before would be more challenging, we underestimated just how much additional work that difference in size would generate. Scaling up doesn’t mean the same only larger. It creates new technical problems that are difficult or impossible to foresee (and therefore difficult to schedule and budget). In this particular case, it meant moving from the “hobby shop” to more formal design and manufacturing environments. It dramatically increased the number of tasks, organizations, and groups that project leaders had to coordinate, evaluate, and support.

Not recognizing the true extent of the work resulted partly from never having experienced a project this big and rejected a dangerous optimism that we often bring to our projects. A can-do attitude is important, but underestimating the effort needed because you assume this project will be free of the problems and inefficiencies that plagued past projects is dangerous. Listen to the wisdom of experience, including rules of thumb (spend 50 percent of your costs by Critical Design Review), past experience (it took 150 people in the past to put a large observatory through integration and test), and common sense (is it really practical to have one group of people analyze and design hardware and software, another group build it, and a third group test it?). We did not always do that, assuming, for instance, that 100 people could do the integration and testing and asking three separate groups to design, build, and test hardware and software components.

On the positive side, we took time early on to document expectations in detail and develop schedules, budgets, descriptions of responsibilities, and fiscal monitoring tools. This preparatory work helped avoid countless future disagreements about tasks and resources. It put us in a position to measure our progress against clearly defined goals and schedules. And it mitigated, to some degree, the unforeseen problems that inevitably arise in long projects. Ours took thirteen years from study phase to launch. During that time, one design engineer died and others retired or turned their attention to other work. Even good, detailed documentation could not entirely make up for the expertise lost in those cases, but it allowed those who took over their roles to get their bearings quickly.

So one important lesson learned is this: Invest time and effort in thorough planning and documentation up front. Doing so will save you time, money, and headaches later on.

Of course, you should be sure to use the management tools you put in place. We often ignored them, explaining away variances, schedule slips, interrelationship disconnects, and technical problems. When our earned-value measurement system told us that the cost of work accomplished so far indicated that we were overspending and underperforming, our gut feeling was that we had workarounds to solve the problem, but the system was right.

Four Instruments, Four Management Stories

Four different instruments included in the observatory were developed by different groups at a variety of locations. Each of these elements of the project was managed somewhat differently from the others. Their circumstances suggest that there is no one, right model for managing large projects, plenty of ways to go wrong, and some key ingredients of success.

Instrument One

One of the instruments had three co-Principal Investigators located at three separate institutions. NASA Headquarters tried to alleviate the tension among them by making one scientist responsible for the entire instrument. Though the participants agreed to this arrangement, it did not eliminate the problems. In reality, the assigned Instrument Manager was in charge of only half the relevant work at his center and none at the other institutions. Assisted only by a coordinator, he shared secretarial, procurement, and financial support with others in his section. So a $40 million instrument was being managed by a two-man team that only actually controlled a third of the work.

One institution sharing the workload was a university that had no experience building flight hardware, but the Instrument Manager had no experienced manpower to assign to the university. Basically, the plan was to have the funds to clean up the eventual mess that all the managers knew would happen.

Center management also had a skewed perception of the complexity of the instrument, which was prevented from flying on its original mission due to budget cuts. They had repeatedly been shown a sketch of it created for that cancelled mission. When the Instrument Manager’s center sold the instrument to this new project, they gave the impression — which proved to be false — that extensive engineering backed up the drawing. So management failed to realize how much work needed to be done to build this complex instrument until the project was under way.

In this case, divided responsibility, insufficient manpower, and lack of clarity about the complexity of the task led inevitably to problems.

Instrument Two

The second instrument had only one manager with total responsibility. That avoided issues of divided accountability, but “total responsibility” ended up being the problem. The manager was a very capable section head who also worked on other tasks. He did his own financial, scheduling, and procurement work. He worked hard and convinced himself that he knew everything happening with his contractors. He didn’t, but he was so overloaded with work and working so hard to stay on top of it that he was unaware he had lost control.

The project office took up the issue of lack of management support with one of the instrument organization’s senior managers. A detailed analysis was put together and discussed with him, but the senior manager pushed for a management consultant to study the situation. After three months of observation, the consultant agreed with the project office’s assessment. The report was filed and ignored, however. Eventually, continuing problems spurred a meeting of NASA headquarters, center management, and the instrument organization’s senior management, but it came too late to avoid the problems poor management had caused.

Here, an overcommitted manager and the failure of senior management to respond to a problem quickly and decisively were the source of the difficulties.

Instrument Three

A third, simpler instrument also suffered from some management problems, but it had what turned out to be the advantage of being delivered from one NASA center to another. Centers feel a certain (usually friendly) rivalry and need to maintain their reputations in the eyes of the other centers and NASA headquarters. Our project office once brought problems at another center working on the project to the attention of headquarters. The center responsible for the instrument responded by working hard to strengthen its management. If Instrument One had not come from the same center that also housed the project office, reputational pressure might have resulted in a faster resolution of problems.

Instrument Four

The development of the fourth instrument should not have worked, but it did. Hardware responsibility was divided among four different groups with five funding sources from four different countries. But the team took major steps to promote teamwork and a shared understanding of their task early on. They agreed to put their egos aside, support each other’s efforts, and follow the lead of the principal investigator. The instrument was managed by a committee, but the committee acted as a single unit.

Even this effective team experienced two setbacks. First, a shortage of funding caused them to choose hardware over system engineering support early in the design phase. This contributed to later technical performance issues. These problems exposed a second issue. No clear lines of responsibility for hardware integration had been laid out with the contractor responsible. In comparison with the issues of poor management that plagued the project’s other instruments, these two problems, spread over eight years of collaboration, were relatively minor.

Some Lessons Learned

Despite the many problems suggested, the project was highly successful and yielded outstanding science for almost a decade. As is often the case at NASA, people found ways to do the work and do it well. But recovering from our mistakes was costly and took a toll on participants. Our excellent Project Manager came very close to burning out two of his highest performers. (There is a lot of literature on how to motivate poor performers but not much on how to tell when you are driving your “workaholics” too far.)

Some of the most important lessons of the CGRO project are these:

• Spend ample time up front for detailed, clear, realistic planning. The effort will pay benefits throughout the life of the project.

• Work hard to provide resources appropriate to the complexity of the project. It’s expensive to try to do work on the cheap.

• When problems arrive, deal with them quickly and decisively. Trying to explain them away or ignore them makes them worse.

• Communicate, communicate, communicate. The success of the work on Instrument Four came from continual, extensive communication; many of our problems were due to poor communication.

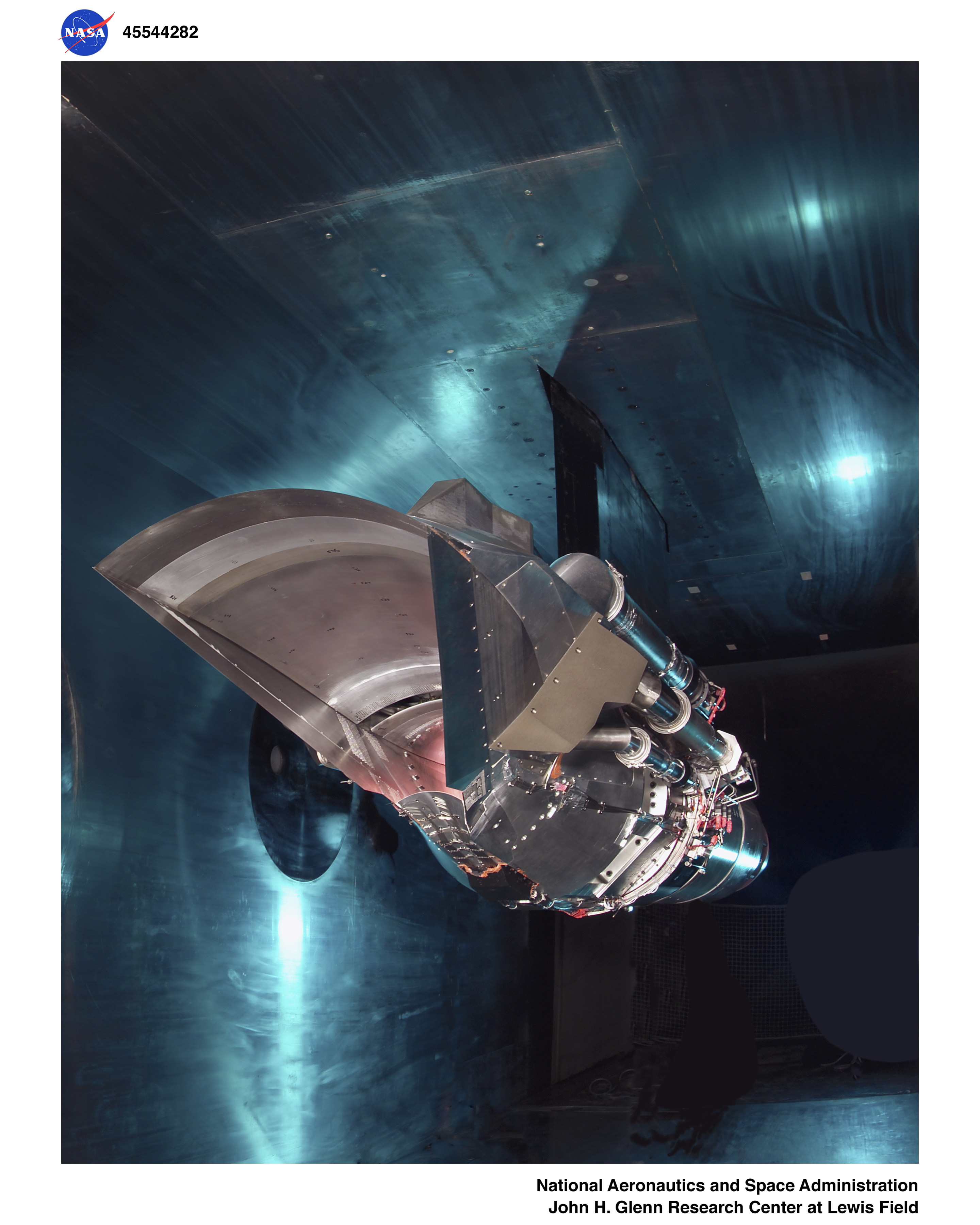

The Gamma Ray Observatory (GRO) with its solar array panels deployed is grappled by the remote manipulator system April 11, 1991. This view taken by the STS 37 crew shows the GRO against a backdrop of clouds over water on the Earth’s surface.