Don Cohen, Managing Editor

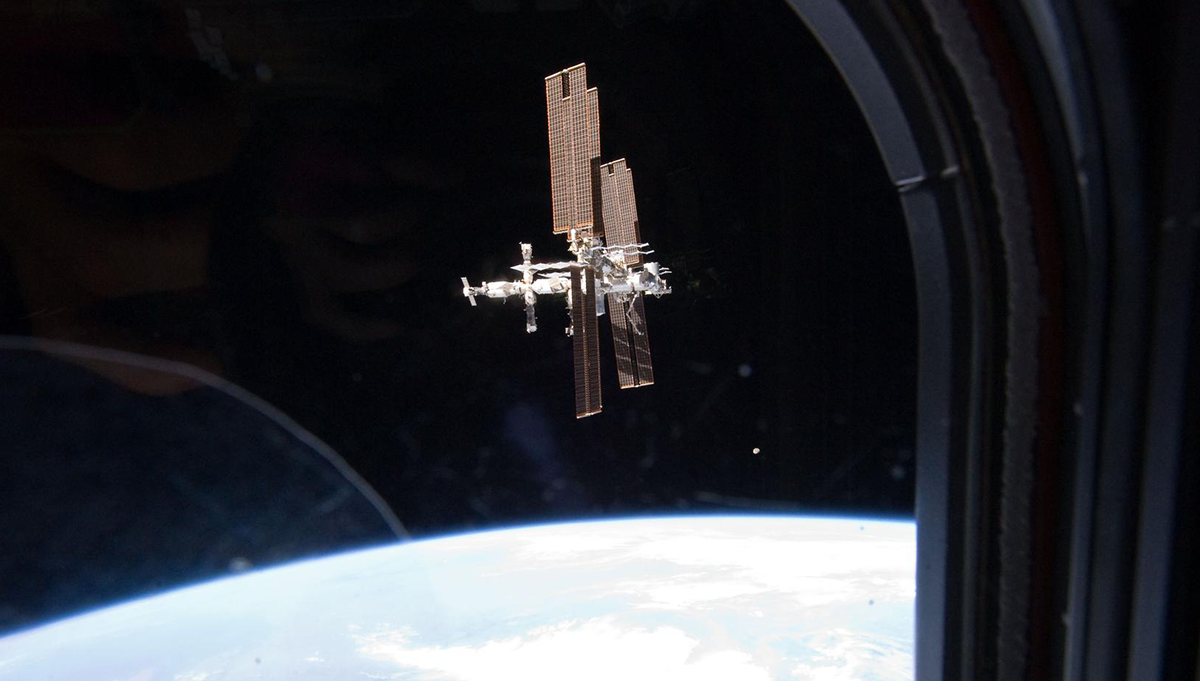

At the Academy’s Masters Forum in April, the word “risk” turned up in many presentations and discussions: how to anticipate and mitigate risks; how to learn from risks that turn into real problems; how much risk is acceptable in robotic and human space flight. Underlying all that attention was recognition that NASA’s missions are inherently risky. Appropriately managing risk is one of project teams’ core responsibilities.

Risk is a focus of this issue of ASK, too. In “Juno: Making the Most of More Time,” Rick Grammier describes how his team has used an unusually long definition and planning phase to evaluate and address risks. Extensive prototyping and testing of instruments and comprehensive discussions between engineers and scientists should, Grammier thinks, reduce risks common in complex science missions.

The problem that nearly turned the Hubble telescope into worthless space junk—an improperly ground primary mirror—was so basic that no one saw it as a likely risk. Frank Cepollina’s “Applying the Secrets of Hubble’s Success to Constellation” looks at the design for in-orbit servicing that made it possible to rescue Hubble and says that designers of NASA’s next generation of launch vehicles and spacecraft should follow Hubble’s lead. One of the lessons of Hubble is that unanticipated problems are likely to occur. Counterfactual thinking, recommended by Gerstenmaier, Goodwin, and Keaton, can help predict some of them. Questioning assumptions and analyzing the effect of past decisions are among the techniques that can uncover hidden risks.

Because dealing with risk is central to mission success, Dave Lengyel at the Exploration Systems Mission Directorate has been linking risk management and knowledge sharing. His aim is to ensure that lessons transferred from one project to another are the ones that really matter, and that NASA’s knowledge management efforts are not an example of what he calls “collect, store, and ignore.” Charles Tucker’s related articles (“Fusing Risk Management and Knowledge Management” and “Managing—and Learning from—a Lunar Reconnaissance Orbiter Risk”) explain Lengyel’s work.

Those articles bring up the other important theme of this issue: devoting your efforts to what really matters, or what we might call “mission pragmatism.” Just as Lengyel’s knowledge-based risk approach captures lessons that count, the knowledge harvesting process Nancy Dixon and Katrina Pugh describe includes careful determination of which projects will generate knowledge that justifies the effort and expense of identifying and communicating it. “Infusing Operability” shows how practical wisdom gained from Kennedy Space Center’s long history of launches is contributing to new vehicle designs that will make ground operations more efficient and reliable. Ancona and Bresman’s “X-Teams for Innovation” stresses the importance of project teams that know how to get the external knowledge and support they need to do their work. And articles about the Applied Physics Lab (by Svetlana Shkolyar) and the Applied Meteorology Unit (by Carol Anne Dunn and Francis Merceret) emphasize the importance of the word “applied.” Both organizations develop technologies that respond to clear and critical user needs and directly contribute to mission success.

Finally, there is Tony Kim’s story of an unmanned aerial vehicle science project (“To Stay or Go?”), which combines the themes of risk and pragmatism. When a range safety officer’s aversion to risk makes flight permission unlikely, the team sensibly moves the project elsewhere.