By William H. Gerstenmaier, Scott S. Goodwin, and Jacob L. Keaton

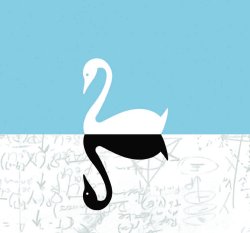

Before Europeans explored Australia in the 1600s, it was held as an indisputable fact in Europe that all swans were white. This “fact” was based on empirical evidence stretching back for thousands of years that grew stronger as each observation of a white swan confirmed the belief that only white swans existed. Yet all that evidence was invalidated by a single observation of a black swan in Australia. In time, the black swan became a metaphor for things that weren’t supposed to exist, yet did.

Author Nassim Taleb uses the story of the discovery of the black swan and how it demolished millennia of prior evidence to describe events that were not thought possible:

A Black Swan event is a highly improbable occurrence with three principal characteristics: It is unpredictable; it carries a massive impact; and, after the fact, we concoct an explanation that makes it appear less random, and more predictable, than it was.

Today, we take black swans for granted and may not grasp why Europeans had such difficulty coming to terms with their existence. We may be more prone to experiencing extreme, unexpected events than the people who lived before us because of the complexity and interconnectedness of the technologies we use, and we can easily find examples of Black Swan events in our time. Such a list would include both the Challenger and Columbia accidents, the events of 9/11, the collapse of the Interstate 35W bridge in Minnesota, and many of the events that have roiled world financial markets in recent decades. Given our experiences with extreme events, it is natural for us to want to identify them and try to predict when they will happen.

We often attempt to do this by plotting the probability distributions of events along a bell-shaped curve whose narrow “tails” at either end represent outlier events, supposedly rare and unlikely to happen. It is likely these tails are thicker than the typical bell curve would suggest and some of those outlier events are much more prevalent than we imagine. There is also the question of whether a bell curve, or any other distribution model, is appropriate to determine whether an event is likely to occur. The randomness you find in a casino is limited and well understood; the casino knows how many cards are in a deck, how many faces on a die and, because all possible outcomes are known in advance, it can calculate the odds of any particular outcome occurring. In the world outside the casino, we don’t know all the possible outcomes, nor do we know precisely how many cards are in the deck. In many cases we may not even know how many decks there are.
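To make the point about thick tails concrete, here is a minimal sketch comparing how likely an outlier is under a bell curve and under one fat-tailed alternative. The choice of a Student-t distribution with 3 degrees of freedom (rescaled to unit variance) is an assumption made purely for illustration, as are the function names; nothing here says real-world events follow that model.

```python
import math


def normal_tail(k: float) -> float:
    """P(X > k) for a standard normal (bell-curve) variable."""
    return 0.5 * math.erfc(k / math.sqrt(2.0))


def student_t3_tail(k: float) -> float:
    """P(X > k) for a Student-t variable with 3 degrees of freedom,
    rescaled to unit variance so that k is measured in standard deviations.
    Uses the closed-form CDF for 3 degrees of freedom (illustrative choice)."""
    return 0.5 - (k / (1.0 + k * k) + math.atan(k)) / math.pi


if __name__ == "__main__":
    # Compare the chance of exceeding 1, 3, and 5 standard deviations.
    for k in (1, 3, 5):
        thin, fat = normal_tail(k), student_t3_tail(k)
        print(f"{k} sigma: bell curve {thin:.2e}, "
              f"fat tail {fat:.2e}, ratio {fat / thin:.1f}")
```

Both models have the same variance, yet they disagree by orders of magnitude about events five standard deviations out, while the fat-tailed model actually assigns less probability to modest one-sigma deviations. Which model, if any, is right is exactly what we usually cannot know in advance.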

When unexpected events do occur, we often dismiss them because they did not have a significant impact and we think the chances that they will occur again are even more remote. Harder to dismiss are outlier events that almost occur and that would have had a serious impact; these near misses should not be dismissed lightly. Such events are “gifts”—nothing bad really happened, and they provide a tremendous opportunity for learning. We can use these events to brainstorm similar situations in other systems and rethink our assumptions and models, but we must overcome two common biases first.

When an extreme event occurs, an investigation is initiated to determine exactly what happened and why. When you see all the data available to you after the event, you can build a story of how it all fits together and you end up with an explanation that makes complete sense. This leads you to believe the event was predictable—that is “hindsight bias.” But it’s difficult before the event occurs to be perceptive enough to know this kind of event may be sitting out there and ready to occur and to grasp how it might play out. Once causes are identified and thought to be understood, we conclude that if only we had done x, y, and z, the event wouldn’t have occurred. Then we institutionalize x, y, and z in our plans and processes to prevent the same thing from happening again. Such changes will not protect us from other extreme events, and implementing these changes as rigid procedures inhibits learning, adaptation, and growth. We end up “following the flow chart,” and we think less actively about what we’re doing and why.

Confirmation bias leads us to form beliefs that are based on repetition, not on analysis or testing. Each recurrence of an event serves to confirm our view that the event will happen again in the same way. Every recurrence that has no serious negative consequences confirms our view that the event is not dangerous and may lull us into accepting it as normal. The Thanksgiving turkey has a thousand days of earned-value metrics behind it; as far as it knows, the day after Thanksgiving will be just like the day before it. Good metrics do not ensure success tomorrow.

These biases cloud our view; we need ways of seeing through the haze. One way of combating our biases and helping defend against potential Black Swan events is through counterfactual thinking. Counterfactual thinking means imagining alternative outcomes to past events. It can be practiced by continuously asking “what if” questions about what might have happened instead of what actually did. It can identify potential risks or solutions to problems that can then be analyzed and tested.

When Endeavour suffered tile damage due to a piece of foam breaking away from a bracket on the external tank during launch on August 8, 2007, the common-sense decision was to repair the tile damage in orbit. Instead we made the decision to return with the tile damaged, and a lot of people could not understand why.

What we did was use the Orbiter Boom Sensor System to create a three-dimensional model and then fashion an exact copy of the damaged tile here on the ground. We tested this model analytically and by simulating re-entry using an arcjet and assuming worst-case heating. We saw some tunneling in the tile and some charring of the felt pad, but the structure underneath was undamaged and would withstand re-entry.

This test allowed us to say conclusively that this would be the worst-case result of not repairing the tile. It was a known condition, unlike what might happen if we attempted to make the repair. The actual result was not as bad as the worst-case scenarios and testing showed us, so it was a good decision.

We used the same process of asking “what if” questions that is at the heart of counterfactual thinking. And this type of thinking can be applied in all areas of program and project management, including budget processes, property disposal, transition management, and overall decision making.

We manage one-of-a-kind projects that entail significant risks, known and unknown, that can have an enormously adverse impact on our outcomes. As project and program managers, what can we do to prepare for unexpected events? No one can answer that in a definitive way, but there are strategies that will help us prepare for and manage the unexpected.

- Purposely induce a counterfactual mind-set prior to major decisions or significant meetings, perhaps by reviewing past close calls. Actively challenge assumptions to look at high-impact risks that supposedly have a low probability of occurring and brainstorm possible scenarios that could entail those risks. Don’t pick sides; let the data drive and flavor the discussion. Then translate the results into productive actions by planning for those risks in your program or project.

- When you do risk management, step back and really brainstorm, pushing the envelope in your risk matrix; ask what else might happen and what effect that might have. But do not become paralyzed by what you discover: risk is real and unavoidable. It is better to discuss and think about it than be totally unprepared for a Black Swan event.

- Even if your project or mission is going well, when earned value looks good, the schedule is being met, and the budget is healthy, ask what could cause a problem that could dramatically change the outcome of your project or mission.

- Recognize that the conventional wisdom of the group is not always correct. We need to guard against groupthink, staying aware of our natural tendency to jump to the same conclusions and move in the same directions. Assign folks to look at non-problem areas that are high risk so we aren’t all focusing on the “problem of the week.” Be creative in thinking of ways to analyze and test possible issues in these high-risk areas to better understand them. Probe the boundaries, test to failure, and know the true margin of the real systems.

- Pay closer attention to anomalies and other unexpected events, not just near misses, and ask what could have happened or what might happen next time. Perhaps we should approach all unexpected and outlier events as near misses, at least initially. Some may be indications of a Black Swan event in the future, and we should treat these as precious learning opportunities.

- When you or others create explanations for events, don’t fall in love with the hypotheses and seek out only supporting data. Instead, assume that a hypothesis is wrong and look for data to refute it; search for that which is counter to what you want to happen. The goal is to find the best answer with the data available at the decision time, not your answer or the group’s answer.

- Improve your predictive and decision-making capabilities by regularly reviewing your past decisions. Capture the data available at the time of the event or decision. Was the information needed to predict the outcome in the available data? Did we fail to collect or analyze it? If the data did not exist, is there a way to create or capture it so it is available next time? Did we capture the proper assumptions we made at the time of the decision? Was the data just not available at the decision time?

- Keep a sense of humor. Always looking for negative consequences and dangers can skew your sense of proportion and balance and will take an emotional toll. Maintain a positive perspective and remember that all problems are positive challenges.

We must believe in our abilities to succeed with our projects and missions and at the same time do everything we can to uncover extreme negative events that can cause failure before they happen. We have to move forward with certainty in what we intend to achieve, and at the same time prepare for the unexpected by doubting everything.

The risks we identify now may not be the risks we need to be the most concerned about, but we can’t be honest about risk if we don’t accept our fallibility and recognize that we have biases that skew our observations and analyses, or if we suppress dissenting opinions. Acknowledging and talking about these issues openly and directly is a good first step.

References:

Nassim Nicholas Taleb, The Black Swan: The Impact of the Highly Improbable (New York: Random House, 2007).

Nassim Nicholas Taleb, Fooled by Randomness: The Hidden Role of Chance in Life and in the Markets (New York: Random House, 2005).

A. D. Galinsky and L. J. Kray, “The debiasing effect of counterfactual mind-sets: Increasing the search for disconfirmatory information in groups,” Organizational Behavior and Human Decision Processes, 91 (2003): 69–81.

About the Author

Jacob L. Keaton is a policy analyst in the International Space Station office in the Space Operations Mission Directorate at NASA Headquarters.