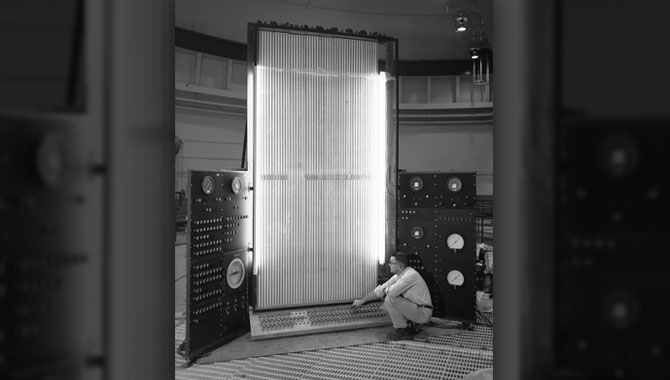

Data computing equipment at reactor at NASA Plum Brook Station circa 1960.

Photo Credit: NASA

One question I repeatedly receive from employees at NASA is, “Why can’t I ever find what I need?” People are accustomed to instant access to information from the World Wide Web through the multitude of search engines available. They cannot understand why it is so difficult to replicate this ease of access at work. The short answer: numbers. Google, for example, indexes billions of URLs and documents and accepts 5 billion queries per day. Those huge numbers make it possible for Google to continually refine its search capabilities. At Johnson Space Center (JSC), our search infrastructure indexes less than one-tenth of our known data and accepts a few thousand queries per month. Even if we could afford to index all the millions of documents JSC has, I am not convinced our search results would greatly improve, because the query sample set would remain small. Given this problem, the NASA-JSC Knowledge Management office has embarked on a project to improve the accessibility and visibility of critical data at NASA. The project is broken down into three phases:

- Understand the employee base.

- Research and evaluate search methodologies.

- Provide data-driven visualization.

The need to improve search at NASA is clear and critical. A recent report by IHS, a company providing business information and analytics, underscores my greatest concerns for the NASA workforce. It reveals that an engineer’s average time spent searching for information has increased 13 percent since 2002. Additionally, 30 percent of total R&D spending is wasted on duplicating research and work previously done, and 54 percent of decisions are made with incomplete, inconsistent, and inadequate information. I fear the problem is only growing as the quantity and complexity of information continue to increase. According to Gartner, the world’s leading information technology research and advisory company, the amount of available data will increase 800 percent by 2018 and 90 percent of it will be unstructured. Our project seeks a NASA-specific solution to the problem.

UNDERSTANDING THE NEEDS

To understand the employee base, we interviewed employees about their needs and frustrations. We learned that employees in different job profiles search differently. For example, a scientist or researcher typically explores data looking for common themes and connections, while an engineer or project manager is more likely to be looking for specific answers to pressing questions. A semantic approach, one that considers context and related terms to determine meaning, is more useful for the former, while a computational or cognitive approach is more useful for the latter. Our team then set out to develop use cases for the identified job profiles to determine what they searched for, how they wanted results presented, and where the content they searched for was located. That process led us to identify both the data critical to our users and the authoritative sources for the data.

METHODOLOGIES

Our benchmarking revealed that, on average, technical professionals must consult 13 unique data sources to get the information they need to make informed and consistent decisions. Understanding the different search methodologies has been crucial to making improvements. We worked with a number of vendors, developers, and search gurus to evaluate the capabilities of semantic, faceted, cognitive, and computational search with the use of natural language processing and advanced text analytics, comparing their search results to those of our current keyword search engine. The team began to realize there is no single solution for search that will meet all the needs at JSC. All of those approaches have their pros and cons; which is best in a given situation comes down to the type of query and the data being searched.

We arrived at some important conclusions:

- A master data management plan is essential. A common process for metadata, format, and structure has to be implemented to improve the results of the search query.

- With the quantity of data growing exponentially, focusing on identifying our critical data is imperative.

- Once identified, standards should be in place for how data is created, where it is stored, and who has access to it.

- The use of analytics to explore, explain, and exhibit the data is essential.

- Information should be presented in the manner most useful to the user.

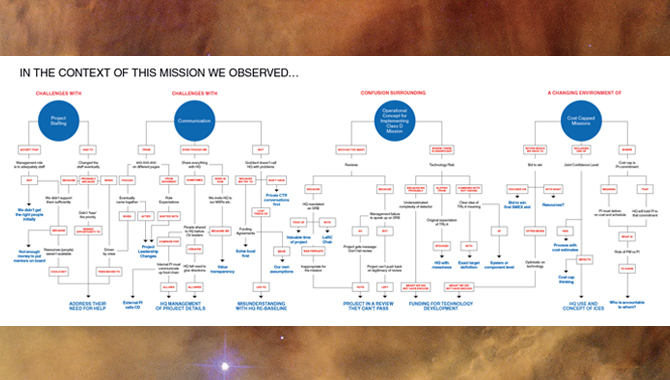

VISUALIZATION

The last phase of our project, in process at the time of this writing, focuses on how the data influences presentation, or, as I call it, data-driven visualization. This is not a new term, but one that took on more meaning for me as the project progressed. Users want more than the common hierarchical list based on keyword relevancy; they also want to visualize connections, themes, and concepts. The advent of social networks, YouTube, and ubiquitous access has transformed the way we find information and the way we learn. A 5-minute video replaces an instruction manual, and #stayinformed replaces storytelling and the news. Fortunately, our technology development has kept pace with users’ requirement to visualize data differently. Network analysis, graph databases, HTML5, and text analytics are but a few of the capabilities available to transform the hierarchical list into a conceptual framework that allows our users to start with a natural language query, visualize a network of concepts and themes, and traverse the connections to find their desired answers much more quickly than in the past.

We have applied this approach to a collection of over 2,000 lessons learned, filterable only by date and center. A common complaint we receive from users is how time-consuming and difficult it is to search through and collect information from the lessons learned. For example, a keyword search on “pressure valve failure” returns a list of over a hundred documents. A majority of them contain only one of the keywords, so a user has to read through each document to determine whether it is relevant.
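The keyword-search problem described above can be reproduced on a toy scale. The sketch below is only an illustration: the five lesson texts and the `search` helper are invented, and it assumes a search that matches a document when any one query term appears, which may not be how the production engine ranks results.

```python
import re

# Hypothetical miniature lessons-learned collection (invented for illustration).
LESSONS = {
    "LL-001": "Pressure valve failure during tank fill caused a test abort.",
    "LL-002": "Cabin pressure sensor drifted after thermal cycling.",
    "LL-003": "Valve seat contamination was traced to cleaning solvent.",
    "LL-004": "Software failure delayed the integrated test schedule.",
    "LL-005": "Relief valve failure followed a pressure spike in the feed line.",
}


def tokenize(text):
    """Lowercase the text and return its set of alphabetic word tokens."""
    return set(re.findall(r"[a-z]+", text.lower()))


def search(query, docs, require_all=False):
    """Return ids of documents containing any (or, optionally, all) query terms."""
    terms = tokenize(query)
    if require_all:
        matches = lambda words: terms.issubset(words)
    else:
        matches = lambda words: bool(terms & words)
    return [doc_id for doc_id, text in docs.items() if matches(tokenize(text))]


any_hits = search("pressure valve failure", LESSONS)
all_hits = search("pressure valve failure", LESSONS, require_all=True)
print("match any term: ", any_hits)
print("match all terms:", all_hits)
```

Matching on any term returns every document in this toy collection, while only two of them mention all three words. Requiring all terms helps, but it still says nothing about whether the words relate to each other in the text.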

Using a topic-modeling algorithm known as Latent Dirichlet Allocation (LDA), we analyzed the documents. Topic modeling provides an algorithmic way to manage, organize, and annotate large text archives. The annotations aid information retrieval, classification, and corpus exploration. Topic modeling uses text analytics to discover associations and correlations among concepts, drawing out latent themes based on the co-occurrence of terms within the documents.

Topic models provide a simple way to analyze large volumes of unlabeled text. A “topic” consists of a cluster of words that frequently occur together. Using contextual clues, topic models can connect words with similar meanings and distinguish between uses of words with multiple meanings. Formally, a topic is a probability distribution over the words in the collection’s vocabulary, and each document is assigned a mixture of topics. A document may be assigned multiple topics, but typically one topic has the highest probability. The topic is identified by its associated terms and their probabilities. Now, when I look at the topic containing “pressure, valve, and failure,” I know there is a higher likelihood that the documents associated with this topic pertain to pressure valve failure and not just one of the words. Additionally, topic modeling in this example drops the number of documents to review to fewer than 20.
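To make the idea concrete, here is a minimal sketch of LDA fitted by collapsed Gibbs sampling, using only the Python standard library. It is not the implementation used in the project, which the article does not describe. The `fit_lda` function, its hyperparameter defaults (`alpha`, `beta`, `iterations`), and the four toy documents are all assumptions chosen for illustration.

```python
import random


def fit_lda(docs, num_topics, iterations=200, alpha=0.1, beta=0.01, seed=0):
    """Fit LDA by collapsed Gibbs sampling (illustrative, not optimized).

    Returns (doc_topic, topic_word, vocab): per-document topic probabilities,
    per-topic word probabilities, and the sorted vocabulary.
    """
    rng = random.Random(seed)
    vocab = sorted({w for doc in docs for w in doc})
    word_id = {w: i for i, w in enumerate(vocab)}
    V, K = len(vocab), num_topics

    doc_topic_counts = [[0] * K for _ in docs]
    topic_word_counts = [[0] * V for _ in range(K)]
    topic_totals = [0] * K
    assignments = []

    # Start from a random topic assignment for every word occurrence.
    for d, doc in enumerate(docs):
        doc_assign = []
        for w in doc:
            k = rng.randrange(K)
            doc_assign.append(k)
            doc_topic_counts[d][k] += 1
            topic_word_counts[k][word_id[w]] += 1
            topic_totals[k] += 1
        assignments.append(doc_assign)

    for _ in range(iterations):
        for d, doc in enumerate(docs):
            for i, w in enumerate(doc):
                v, k = word_id[w], assignments[d][i]
                # Remove the current assignment before resampling it.
                doc_topic_counts[d][k] -= 1
                topic_word_counts[k][v] -= 1
                topic_totals[k] -= 1
                # Weight each topic by how much the document uses it and
                # how strongly the topic favors this word.
                weights = [
                    (doc_topic_counts[d][t] + alpha)
                    * (topic_word_counts[t][v] + beta)
                    / (topic_totals[t] + V * beta)
                    for t in range(K)
                ]
                k = rng.choices(range(K), weights=weights)[0]
                assignments[d][i] = k
                doc_topic_counts[d][k] += 1
                topic_word_counts[k][v] += 1
                topic_totals[k] += 1

    # Convert smoothed counts to probability distributions.
    doc_topic = [
        [(c + alpha) / (len(doc) + K * alpha) for c in counts]
        for doc, counts in zip(docs, doc_topic_counts)
    ]
    topic_word = [
        [(c + beta) / (topic_totals[k] + V * beta) for c in topic_word_counts[k]]
        for k in range(K)
    ]
    return doc_topic, topic_word, vocab


# Hypothetical, already-tokenized toy documents (invented for illustration).
DOCS = [
    "pressure valve failure pressure valve leak".split(),
    "valve failure pressure relief valve".split(),
    "software schedule delay software test".split(),
    "schedule delay test software review".split(),
]

doc_topic, topic_word, vocab = fit_lda(DOCS, num_topics=2)
for k, dist in enumerate(topic_word):
    top = sorted(zip(dist, vocab), reverse=True)[:3]
    print(f"topic {k}:", [(w, round(p, 2)) for p, w in top])
for d, dist in enumerate(doc_topic):
    print(f"doc {d}:", [round(p, 2) for p in dist])
```

Each row of `topic_word` is one topic's distribution over the vocabulary, and each row of `doc_topic` is one document's mixture of topics. For a real collection, an established library implementation would be a more practical choice than a hand-written sampler.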

Another benefit of this approach is the ability to correlate the topics with each other and with metadata, such as Project Phase, Center, or Safety Issues. By correlating topics to each other, users can quickly expand their investigation to other topics that may have useful ancillary information. We accomplish this by applying data-driven visualization techniques. In our preliminary testing, users have been able to jump easily to a topic of interest, cutting their search time dramatically. We are still testing to quantify the time reduction.
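One simple way to correlate topics with each other and with metadata is sketched below. The article does not say which correlation measure the team used, so Pearson correlation is an assumption here, as are the record layout, the `center` values, the invented topic probabilities, and the 0.5 threshold. The resulting edge list is the kind of structure a network visualization could draw.

```python
from collections import defaultdict
from math import sqrt

# Hypothetical per-document topic probabilities with metadata (invented values).
RECORDS = [
    {"center": "JSC", "topics": [0.70, 0.20, 0.10]},
    {"center": "JSC", "topics": [0.60, 0.30, 0.10]},
    {"center": "KSC", "topics": [0.10, 0.15, 0.75]},
    {"center": "KSC", "topics": [0.20, 0.10, 0.70]},
    {"center": "GRC", "topics": [0.50, 0.40, 0.10]},
]


def pearson(xs, ys):
    """Pearson correlation of two equal-length sequences (0.0 if either is constant)."""
    n = len(xs)
    mean_x, mean_y = sum(xs) / n, sum(ys) / n
    cov = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    sd_x = sqrt(sum((x - mean_x) ** 2 for x in xs))
    sd_y = sqrt(sum((y - mean_y) ** 2 for y in ys))
    return cov / (sd_x * sd_y) if sd_x and sd_y else 0.0


def topic_edges(records, threshold=0.5):
    """Return (topic_a, topic_b, r) for topic pairs correlated above the threshold."""
    columns = list(zip(*(rec["topics"] for rec in records)))
    edges = []
    for a in range(len(columns)):
        for b in range(a + 1, len(columns)):
            r = pearson(columns[a], columns[b])
            if r > threshold:
                edges.append((a, b, round(r, 3)))
    return edges


def mean_topics_by(records, field):
    """Average the topic probabilities of documents sharing a metadata value."""
    groups = defaultdict(list)
    for rec in records:
        groups[rec[field]].append(rec["topics"])
    return {
        value: [sum(col) / len(col) for col in zip(*rows)]
        for value, rows in groups.items()
    }


edges = topic_edges(RECORDS)
by_center = mean_topics_by(RECORDS, "center")
print("correlated topic pairs:", edges)
for center, means in by_center.items():
    print(center, [round(m, 2) for m in means])
```

Because each document's topic probabilities sum to one, correlations between topics lean negative, so a threshold like this should be treated as a starting point for exploration rather than a statistical test.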

More work still needs to be done, but the team is excitedly looking forward to the future. In the next phase, we will conduct pilot tests of various visualization platforms to determine the capabilities, feasibility, and scalability of the systems. Once these tests are completed, we should have a better idea of the requirements for scaling the systems up for use across NASA.

David Meza is the Chief Knowledge Architect at NASA Johnson Space Center.